Optimising performance and security of web-based software

On-demand applications are often talked about in terms of how suppliers should be adapting the way their software is provisioned to customers.

These days, however, the majority of on-demand applications are provided by user organisations to external users – consumers, users from customer or partner organisations, and their own employees working remotely.

More direct online interaction with consumers, partners and other businesses should speed up processes and sales cycles and extend geographic reach – organisations that do not offer it will be less competitive.

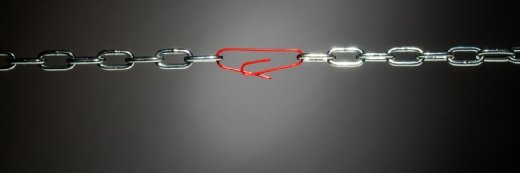

But there are two big caveats. First, the benefits will only be gained if the applications perform well and have a high percentage of uptime – approaching 100% in many cases. Second, any application exposed to the outside world is a security risk. Attackers may exploit software vulnerabilities as a way into an organisation’s IT infrastructure, or try to stop the application itself from running effectively through application-level denial of service (DoS). Either kind of attack can limit the organisation’s ability to continue with business, and often damages its reputation as a result.

So, how does a business ensure the performance and security of its online applications?

Features of an application delivery controller

- Network traffic compression – to speed up transmission.

- Network connection multiplexing – making effective use of multiple network connections.

- Network traffic shaping – a way of reducing latency by prioritising the transmission of workload packets and ensuring quality of service (QoS).

- Application-layer security – the inclusion of web application firewall (WAF) capabilities to protect on-demand applications from outside attack, for example application-level denial of service (DoS).

- Secure sockets layer (SSL) management – acting as the landing point for encrypted traffic and managing the decryption and rules for ongoing transmission.

- Content switching – routing requests to different web services depending on a range of criteria, for example the language settings of a web browser or the type of device the request is coming from.

- Server health monitoring – ensuring servers are functioning as expected and serving up data and results that are fit for transmission.
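Content switching, one of the features listed above, can be sketched in a few lines of Python. This is a minimal illustration only, not how any particular ADC product works: the `Request` type, the `choose_pool` function and the pool names (`mobile-web`, `web-fr`, `web-default`) are all hypothetical, and a real controller would apply far richer rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """Hypothetical view of the request attributes a controller might inspect."""
    path: str
    accept_language: str = ""
    user_agent: str = ""


def choose_pool(req: Request) -> str:
    """Pick a backend pool name from the device type and browser language."""
    ua = req.user_agent.lower()
    # Crude device detection, for illustration only.
    if "mobile" in ua or "android" in ua or "iphone" in ua:
        return "mobile-web"
    # Take the primary language tag, e.g. "fr-fr" from "fr-FR,fr;q=0.9,en;q=0.8".
    primary = req.accept_language.split(",")[0].split(";")[0].strip().lower()
    if primary.startswith("fr"):
        return "web-fr"
    return "web-default"
```

The rules are checked in a fixed order, so in this sketch a French-language request from a phone is sent to the mobile pool rather than the French one; a real deployment would need to decide which criterion takes priority.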

Performance of online applications

Two things need to be achieved here. First, there needs to be a way of measuring performance. Second, there needs to be an appreciation of, and investment in, the technology that ensures and improves performance.

Testing the performance of applications before they go live can be problematic. Development and test environments are often isolated from the real world, and while user workloads can be simulated to test performance on centralised infrastructure, the real-world network connections users rely on, which are increasingly mobile ones, are harder to test. The availability of public cloud platforms helps, as runtime environments can be simulated, even if the ultimate deployment platform is an internal one. This saves an organisation having to over-invest in its own test infrastructure.

So, upfront testing is all well and good, but ultimately, the user experience needs to be monitored in real time after deployment. This is not just because it is not possible to test all scenarios before deployment, but because the load on an application can change unexpectedly due to rising user demand or other issues, especially over shared networks. User experience monitoring was the subject and title of a 2010 Quocirca report, much of which is still relevant today, but the biggest change since then has been the relentless rise in the number of mobile users.
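To make the idea of real-time monitoring concrete, the sketch below checks whether a batch of measured response times breaches a target. It is an assumption-laden illustration rather than a description of any monitoring product: the 95th percentile, the 2,000ms target and the function names are all chosen for the example.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Nearest-rank method: the smallest value with at least pct% of samples at or below it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def breaches_target(response_times_ms, target_ms=2000, pct=95):
    """True if the chosen percentile of response times exceeds the target."""
    return percentile(response_times_ms, pct) > target_ms
```

A percentile is used here rather than an average because a handful of very slow responses, for example from users on poor mobile connections, can be hidden by a healthy-looking mean.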

Examples of tools for the end-to-end monitoring of the user experience, which covers both the application itself and the network impact on it, include CA’s Application Performance Management, Fluke Networks’ Visual Performance Manager, Compuware’s APM and ExtraHop Networks’ specific support for Amazon Web Services (AWS).

It is all well and good being able to monitor and measure performance, but how do you respond when it is not what it should be?

There are two issues here: first, the ability to increase the number of application instances and the supporting infrastructure to handle the overall workload; and second, the ability to balance that workload between these instances.

Increasing the resources available is far easier than it used to be, thanks to the virtualisation of infrastructure in-house and the availability of external infrastructure as a service (IaaS) resources. For many, deployment is now wholly on shared IaaS platforms, where an application that needs more resources simply draws them from the cloud service provider’s wider infrastructure. This works because, with many customers sharing the same resources, each will have different demands at different times.

Global providers include AWS, Rackspace, Savvis, Dimension Data and Microsoft. There are many local IT service providers (ITSPs) with cloud platforms in the UK, including Attenda, Nui Solutions, Claranet and Pulsant. Some ITSPs partner with one or more global providers to make sure they too have access to a wide range of resources for their customers.

Even those organisations that choose to keep their main deployment on-premises can benefit from the use of “cloud-bursting” – the movement of application workloads to the cloud to support surges in demand – to supplement their in-house resources. In Quocirca’s recent research report, In demand: the culture of online services provision, those organisations providing on demand applications to external users were considerably more likely to recognise the benefits of cloud-bursting than those that did not.
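The basic arithmetic behind cloud-bursting can be sketched as follows. This is a simplified illustration under stated assumptions: demand is measured in requests per second, every instance handles the same load, and the `plan_capacity` function and its parameters are hypothetical names invented for the example.

```python
import math


def plan_capacity(demand_rps, per_instance_rps, in_house_max):
    """Split the instances needed for a given demand between in-house and cloud.

    In-house capacity is used first; only the overflow is "burst" to the cloud.
    """
    needed = math.ceil(demand_rps / per_instance_rps) if demand_rps > 0 else 0
    in_house = min(needed, in_house_max)
    return {"in_house": in_house, "cloud": needed - in_house}
```

With eight in-house instances each handling 100 requests per second, a demand of 450 requests per second stays entirely in-house, while a surge to 1,250 needs 13 instances, five of which would run in the cloud.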

Read more on software quality

In demand: the culture of online services provision, Quocirca 2013

User experience monitoring, Quocirca 2010

Outsourcing the problem of software security, Quocirca 2012

Keep your software healthy during agile development

Being able to measure performance and having access to virtually unlimited resources to respond to it is one thing, but how do you balance the workload across them? The key technologies for achieving this are application delivery controllers (ADCs).

ADCs are basically next-generation load balancers and are proving to be fundamental building blocks for advanced application and network platforms. They enable the flexible scaling of resources as demand rises and/or falls and offload work from the servers themselves.
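The load-balancing core of an ADC can be illustrated with a "least connections" policy that skips unhealthy servers. This is a minimal sketch of one common balancing approach, not the algorithm of any named product; the `Server` class and `pick_server` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical record of one application instance behind the balancer."""
    name: str
    healthy: bool = True
    active_connections: int = 0


def pick_server(servers):
    """Return the healthy server with the fewest active connections."""
    candidates = [s for s in servers if s.healthy]
    if not candidates:
        raise RuntimeError("no healthy servers available")
    # min() returns the first server on a tie, so list order breaks ties.
    chosen = min(candidates, key=lambda s: s.active_connections)
    chosen.active_connections += 1  # Account for the connection being handed over.
    return chosen
```

Because the health flag is checked on every request, an instance that fails its health check simply stops receiving traffic, and instances added to the list to meet rising demand start receiving it straight away.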

The best-known ADC supplier was Cisco, but Cisco recently announced it would discontinue further development of its Application Control Engine (ACE) and recommends another leading supplier’s product instead – Citrix’s NetScaler. Other suppliers include F5 – the largest dedicated ADC specialist – Riverbed, Barracuda, A10, Array Networks and Kemp.

So, you can measure performance, you have the resources to meet demand and the means to balance the workload across them, as well as offload some of the work with ADCs – but what about security?

Security of online applications

The first thing to say about the security of online applications is you do not have to do it all yourself. Use of public infrastructure puts the onus on the service provider to ensure security up to a certain level. There are three main approaches to testing and ensuring application security: code and application scanning; manual penetration testing; and a web application firewall.

In code and application scanning, thorough scanning aims to eliminate software flaws. There are two scanning approaches: the static scanning of code or binaries before deployment; and the dynamic scanning of binaries during testing or after deployment. On-premises scanning tools have been relied on in the past – IBM and HP bought two of the main suppliers – but the use of on-demand scanning services, for example from Veracode, has become increasingly popular, as the providers of such services have visibility into the tens of thousands of applications scanned on behalf of thousands of customers. Such services are often charged for on a per-application basis, so unlimited scans can be carried out, even on a daily basis. The relatively low cost of on-demand scanning services makes them affordable and scalable for all applications, including non-mission-critical ones.

The second technique is manual penetration testing (pen-testing). Here, specialist third parties are engaged to test the security of applications and the effectiveness of defences. Because actual people are involved in the process, pen-testing is relatively expensive and only carried out periodically, so new threats may emerge between tests. Most organisations will find pen-testing unaffordable for all deployed software, so it is generally reserved for the most sensitive and vulnerable applications.

The third layer in web application security is to deploy a web application firewall (WAF). These are placed in front of applications to protect them from application-focused threats. They are more complex to deploy than traditional network firewalls and, while affording good protection, do nothing to fix the underlying flaws in software. WAFs also need to scale with traffic volumes, as more traffic means more cost. WAFs are a feature of many ADCs, and are less likely to be deployed as separate products than they were in the past. They also protect against application-level DoS where scanning and pen-testing cannot.
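One simple way to picture protection against application-level DoS is a per-client request limit applied in front of the application. The sketch below is a toy sliding-window limiter, not a description of how any WAF product is implemented; the class name, the client identifier and the thresholds are all assumptions made for the example, and real WAFs combine many more signals.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within any window_seconds span."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client id -> timestamps of allowed requests

    def allow(self, client_id, now):
        """Return True if the request at time `now` (in seconds) should pass."""
        hits = self._hits[client_id]
        # Discard timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # Over the limit: reject without recording the request.
        hits.append(now)
        return True
```

A limit of three requests per 10 seconds would let a client's first three requests through, reject a fourth made within the same window, and accept requests again once the earliest ones have aged out.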

Complete software security can never be guaranteed, and many organisations use multiple approaches to maximise protection. However, where one of the reasons for demonstrating software security is to satisfy auditors, it is worth noting that compliance bodies do not themselves mandate multiple approaches. For example, the Payment Card Industry Security Standards Council (PCI-SSC) deems code scanning to be an acceptable alternative to a WAF.