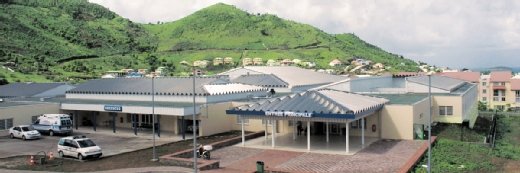

Caribbean island hospital rebuilds after hurricane with DataCore

Hurricane Irma tore the roof off a server room and flooded IT hardware, but that was an opportunity to rebuild redundant IT using DataCore’s SANsymphony software-defined storage

The night of 5 September 2017 was a night to remember for the Caribbean island of Saint Martin. Devastated by Hurricane Irma, the only hospital in the French-administered part of the island had its roof ripped off and servers, storage and backup appliances drenched in rain and sea water.

Fortunately, the hospital had a second server room that replicated the primary infrastructure to ensure activities could continue if disaster struck. The big problem was, however, that it would take a year to rebuild the destroyed infrastructure and get back to normal IT functioning.

Why so long? Because products equivalent to those installed had evolved significantly. Those that had been deployed were no longer available and the ability to synchronise the two sites was not guaranteed. Ultimately, the answer lay in software-defined storage, which allowed the disparate hardware to be united.

“To have two server rooms in active-active mode has been a government requirement since 2013 under the electronic patient records framework,” said Jean-François Desrumaux, IT project chief at the Centre Hospitalier de Saint Martin.

“So, since we only had one, all datacentre development was frozen. We maintained the medical and administrative applications that we had, but we weren’t able to risk installing new ones because we wouldn’t be able to switch to a backup infrastructure in case of a problem.”

Ultimately, Desrumaux’s team restored the hospital’s active-active provision by deploying DataCore’s SANsymphony virtual storage infrastructure.

Active-active storage arrays

Going back to 2014, the IT infrastructure at the Centre Hospitalier de Saint Martin comprised a 10Gbps core network which distributed application workloads between two identical server rooms. In each of these were two VMware ESXi servers built on IBM x3650M4 hardware, with eight cores of Xeon 2.4GHz E5, 128GB of RAM, two 128GB flash drives and two 8Gbps Fibre Channel ports.

Each of these ports was connected to one of two Fibre Channel switches that shared connections to an IBM StorWize V3700 array, which offered 10TB of storage capacity, and to DataCore SANsymphony software-defined storage that was deployed on an IBM x3250M4. The latter served storage volumes to the two ESXi servers.

Between the two server rooms, redundancy was assured at two levels.

Firstly, there were the virtual servers that operated under vSphere HA and DRS, and which communicated via the 10Gbps Ethernet network to ensure they ran the same set of virtual machines (VMs).

Secondly, a dedicated Fibre Channel connection between the two rooms allowed the two StorWize arrays to synchronise their contents in real time using IBM Global Mirror.

So, although there was 20TB of capacity across the two rooms, it was split into 10TB in each, with the two arrays holding synchronised copies of the same data.
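The capacity arithmetic for a mirrored pair can be sketched in a few lines of Python. This is an illustration only: the function name and the simplifying assumption that each room holds one full copy of the data are ours, not something taken from the hospital's tooling.

```python
def mirrored_capacity_tb(per_site_tb, sites):
    """Return (raw, usable) capacity in TB for fully mirrored sites.

    Assumption: every site holds a complete, synchronised copy of the
    data, so usable capacity equals the capacity of a single site.
    """
    raw = per_site_tb * sites
    usable = per_site_tb
    return raw, usable


# Figures from the article: two rooms with a 10TB array in each.
raw, usable = mirrored_capacity_tb(10, 2)
print(f"raw: {raw}TB, usable: {usable}TB")
```

In other words, a second room adds resilience rather than space.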

Added to all this was a backup system in one room only. Dataclone backup software (from Matrix) looked after the contents of the VMs with a 6TB NAS as a target, also from Matrix. Unfortunately, this resided in the room destroyed by the hurricane.

IBM servers restart after the flood

Towards the end of the third day after the hurricane had passed, electricity was restored and Desrumaux’s team switched the IT system back on.

Because the second server room no longer existed, the decision was taken to boost the memory on the two saved ESXi servers to 384GB.

“Tripling the quantity of RAM appeared to us to be a satisfactory solution to maintain the fluid running of applications,” said Desrumaux. “It meant we didn’t need to augment processing power.”

With these two servers being the only remaining ones keeping applications going, the IT project chief was not inclined to disturb them. So, there was no question of taking them down to replace them with better-performing hardware if it meant weeks of no availability.

Meanwhile, the two servers that took a drenching were restarted.

“There was no question of putting them back into production to run applications, because we couldn’t be certain of their reliability,” said Desrumaux. “Because we didn’t have any redundancy, we had the idea of putting these two servers in a room and using them as emergency disaster recovery equipment, to give us minimum protection.

“So, we gave each of them 5TB of disk from the storage arrays and switched on replication – daily and asynchronous – to protect the contents of the production ESXi servers.”

DataCore connects across arrays

Replacing the Matrix appliance in the rebuilt server room didn’t pose a problem because it worked relatively independently of the rest of the infrastructure.

The challenge came in procuring infrastructure that could be synchronised between the two rooms. That was in 2017 and the products proposed by IBM were very different from those that had been deployed in 2014.

For example, StorWize arrays were now designated V3700v2 with 12Gbps SAS drives and had a new OS, while those in production at the hospital had 6Gbps drives.

The idea was hit upon, therefore, to effect synchronisation between the two disk arrays at the level of the DataCore SANsymphony software-defined storage. This provided the ability to pool capacity from different arrays and present it as a single logical volume to servers.

SANsymphony presents a single volume of 10TB to servers, with 20TB planned, because Desrumaux plans to double storage capacity to support new applications.

Validating this setup lasted from December 2017 to November 2018. An added difficulty was that the future second server room still had its own SANsymphony server. This presented a problem similar to that of the storage arrays. That is, the physical machine on which it ran was built on different hardware, a Lenovo x3250M6, which IBM started to re-sell from its Chinese partner at the end of 2014.

Operational in a day

Once tests had been done and settings calibrated, the deployment of the new second server room took just one day. That was the time needed for the first SANsymphony server to replicate 8TB to the new storage system.
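To put that figure in perspective, here is a rough back-of-the-envelope calculation of the average throughput implied by copying 8TB in a day. It is a sketch under stated assumptions: we take "one day" to mean a full 24 hours and 1TB to mean 10^12 bytes, neither of which the hospital has confirmed, and the function name is our own.

```python
def average_throughput(bytes_moved, seconds):
    """Return the average transfer rate as MB/s and Gbit/s (decimal units)."""
    bytes_per_second = bytes_moved / seconds
    return {
        "MB_per_s": bytes_per_second / 1e6,
        "Gbit_per_s": bytes_per_second * 8 / 1e9,
    }


# Assumptions (not from the article): 1TB = 10**12 bytes, one day = 24 hours.
TB = 10**12
DAY_SECONDS = 24 * 60 * 60

rate = average_throughput(8 * TB, DAY_SECONDS)
print(f"{rate['MB_per_s']:.1f} MB/s, {rate['Gbit_per_s']:.2f} Gbit/s")
```

This is only an average. If the copy actually ran for a shorter window, such as a working day, the sustained rate would have been proportionally higher.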

From the administrative point of view, it would have been sufficient to redefine the connections between all the servers and disk arrays across the two rooms. To make things simpler, the extra 10TB was configured as a new LUN. All VMs for the new applications were configured from the ESXi interface.

And so, since December 2018, the Centre Hospitalier de Saint Martin has benefited from two server rooms in active-active mode and no major technical problem has occurred. Desrumaux doesn’t plan for another major refresh before 2022.