The ‘core’ of data science: McKinsey donates Kedro to Linux

Scottish-sounding management consultancy McKinsey has donated Kedro to the Linux Foundation.

Back in 2019, McKinsey launched Kedro as an open source software tool on GitHub for data scientists and data engineers.

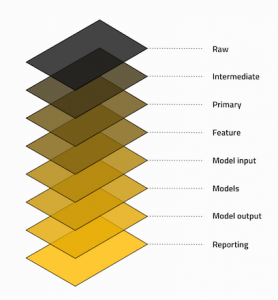

In terms of form and function, this technology is a library of code that can be used to create data and Machine Learning pipelines.
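For readers who have not met the idea of a pipeline library, the following is a minimal sketch in plain Python. It deliberately does not use Kedro's own API: the `Node` class, the `run_pipeline` function and the dataset names are all hypothetical, chosen only to illustrate how such a library might wire small functions together through named datasets.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Node:
    """One pipeline step: a function plus the names of its inputs and output.

    Hypothetical illustration only, not a Kedro class.
    """
    func: Callable[..., Any]
    inputs: List[str]
    output: str


def run_pipeline(nodes: List[Node], catalog: Dict[str, Any]) -> Dict[str, Any]:
    """Run nodes in the listed order, reading and writing named datasets."""
    data = dict(catalog)  # copy so the caller's catalog is not mutated
    for n in nodes:
        args = [data[name] for name in n.inputs]
        data[n.output] = n.func(*args)
    return data


def drop_missing(rows):
    """Data-cleaning step: discard missing values."""
    return [r for r in rows if r is not None]


def mean(rows):
    """Modelling stand-in: compute a simple average."""
    return sum(rows) / len(rows)


# Each node names the dataset it reads and the dataset it produces.
pipeline = [
    Node(drop_missing, ["raw_delays"], "clean_delays"),
    Node(mean, ["clean_delays"], "average_delay"),
]

result = run_pipeline(pipeline, {"raw_delays": [10, None, 20, 30]})
print(result["average_delay"])
```

The point of the design is that each step is an ordinary function that can be tested on its own, while the pipeline definition records how the steps connect.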

Cor, it’s core

The name Kedro derives from the Greek word meaning centre or core.

The Kedro community now counts some 200,000 monthly downloads and over 100 contributors.

Among the organisations that have adopted Kedro as their standard for data science code, one uses it to model air traffic patterns, and Telkomsel (Indonesia’s largest wireless network provider) uses Kedro as a standard across its data science organisation.

Kedro will sit in the Linux Foundation’s LF AI & Data area, a specialist umbrella foundation founded in 2018 to support and accelerate development and innovation in Artificial Intelligence (AI) and data by supporting and connecting technical open source projects.

“We’re excited to welcome the Kedro project into LF AI & Data. It addresses the many challenges that exist in creating machine learning products today and it is a fantastic complement to our portfolio of hosted technical projects. We look forward to working with the community to grow the project’s footprint and to create new collaboration opportunities with our members, hosted projects and the larger open-source community,” says Dr. Ibrahim Haddad, executive director of LF AI & Data.

Yetunde Dada, product lead on Kedro, has said that Kedro is now in the hands of the data science ecosystem and that this is the only way it can grow at this point, i.e. if it is improved by the best people around the world.

So you think you’re industry standard?

This is new ground for McKinsey, as the company has always protected its intellectual property… so what qualifies Kedro as the de facto ‘industry standard’ it is claimed to be?

Ivan Danov, Kedro technical lead, says that this is a framework that borrows concepts from software engineering best practices and brings them to the data science world. It lays all the groundwork for taking a project from an idea to a finished product, allowing developers and engineers to focus entirely on solving the business problem at hand.

Kedro was actively developed before being open-sourced and will continue to be the foundation of all advanced analytics projects within McKinsey.

Scar tissue, share with the birds

“We’ve been building ML products for a long time and on that journey, we’ve accrued a fair amount of scar tissue and learned a lot of important lessons. The ideas and guardrails that exist in Kedro are a reflection of that experience and are designed to help developers avoid common pitfalls and follow best practices,” shares Joel Schwarzmann, product manager.

Now its future can be steered by a much wider range of stakeholders across different industries, geographies and technologies, who bring different perspectives and can apply Kedro to many more use cases.

An extended team of ‘maintainers’, including McKinsey’s own developers, can contribute to Kedro’s development: writing code, shaping product strategies, tracking use cases and voting on decisions that affect the project.