Kubernetes networking evolves: Cilium joins CNCF as an incubating project

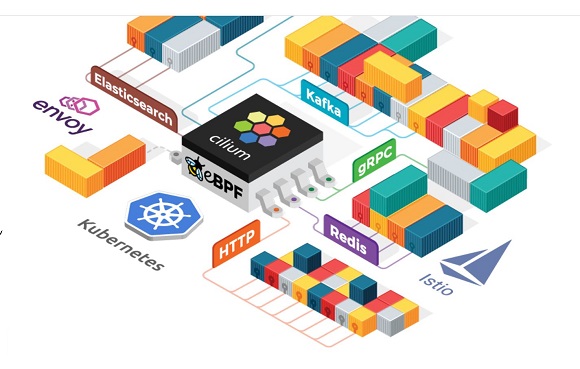

Cilium (pronounced ‘see-lee-um’) provides networking, security and observability for cloud native environments by acting as a CNI (Container Network Interface) and enhanced networking layer for Kubernetes using eBPF (extended Berkeley Packet Filter).

The CNCF Technical Oversight Committee (TOC) has now voted to accept Cilium as a CNCF incubating project.

As many readers will know, eBPF is a technology with origins in the Linux kernel that can run sandboxed programs in an operating system (OS) kernel, extending the capabilities of the kernel without requiring changes to kernel source code or the loading of kernel modules.

“Cilium and eBPF are changing the networking and security landscape, with the majority of big cloud providers now relying on Cilium,” said Thomas Graf, co-creator of Cilium.

Graf says he is happy that Cilium has a new home under the auspices of the CNCF and notes how many organisations’ cloud instances and hyperscalers use Cilium for its ‘deep’ security, performance, scalability and observability.

Cilium is used across the Kubernetes ecosystem by organizations like Adobe, Capital One, Cognite, Datadog, GitLab, Palantir, SAP Concur, Telenor, Trip.com, Wildlife Studios, Yahoo, and many more.

In addition, cloud providers such as Alibaba, AWS, DigitalOcean and Google Cloud use Cilium as the CNI plugin of choice for managed cloud and on-premises Kubernetes platforms.

Google itself has said that eBPF is a networking paradigm that exposes programmable hooks to the network stack inside the Linux kernel. “The ability to enrich the kernel with user-space information – without jumping back and forth between user and kernel spaces – enables context-aware operations on network packets at high speeds,” said Google, in a developer blog.

Envoy & Prometheus

Cilium is tightly integrated with Envoy (a high performance C++ distributed proxy designed for single services and cloud native applications) and Prometheus (an event monitoring and alerting project) and provides an extension framework based on Go.

“Cilium is a critical part of the Datadog network stack as it provides consistent Kubernetes networking across cloud providers as well as performant and secure communications thanks to eBPF,” said Laurent Bernaille, staff engineer at Datadog.

The Cilium project consists of multiple components and layers which can be used independently of each other. This allows users to pick a particular functionality or to run Cilium in combination with other CNIs.

Agent: The agent runs on all Kubernetes worker nodes and other servers hosting workloads. It provides the core eBPF platform and is the foundation for all other Cilium components.

Network Plugins (CNI): The CNI plugin enables organizations to use Cilium to provide networking for Kubernetes clusters and other orchestration systems which rely on the CNI specification.

Hubble: Hubble is the observability component of Cilium. It provides network and security logs, metrics, tracing data, and several graphical user interfaces.

ClusterMesh: ClusterMesh implements a network or service mesh that can span multiple clusters and external workloads running on external virtual machines or bare-metal servers. It provides connectivity, service discovery, network security, and observability across clusters and workloads.

Load Balancer: The load balancer is capable of running in the cluster to implement Kubernetes services and standalone to provide north-south load-balancing in front of Kubernetes clusters.
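To make the load balancer's job concrete, here is a minimal sketch of the general idea behind service load balancing: a service frontend (virtual IP and port) maps to a set of backends, and one backend is chosen per connection. This is an illustration only and not Cilium's implementation, which does this work in eBPF inside the kernel. The `SERVICES` table, the `Flow` type, the `pick_backend` function and all addresses are hypothetical, and hashing the flow tuple is just one simple selection strategy assumed for the example.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Flow:
    """A connection identified by its addresses, ports and protocol."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    proto: str = "tcp"


# Hypothetical service table: frontend (virtual IP, port) -> backend addresses.
SERVICES = {
    ("10.96.0.10", 80): [
        ("10.0.1.5", 8080),
        ("10.0.2.7", 8080),
        ("10.0.3.9", 8080),
    ],
}


def pick_backend(flow: Flow) -> Optional[Tuple[str, int]]:
    """Return a backend for the flow, or None if no service matches.

    Hashing the flow tuple keeps every packet of one connection on the
    same backend without storing per-connection state.
    """
    backends = SERVICES.get((flow.dst_ip, flow.dst_port))
    if not backends:
        return None
    key = (
        f"{flow.src_ip}:{flow.src_port}->"
        f"{flow.dst_ip}:{flow.dst_port}/{flow.proto}"
    )
    digest = hashlib.sha256(key.encode()).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]
```

Calling `pick_backend` twice with the same `Flow` returns the same backend, while a flow addressed to an unknown frontend returns `None`.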

“eBPF allows programs in the kernel to run without kernel modules or modifications,” said Chris Aniszczyk, CTO of CNCF. “It is enabling a new generation of software to extend the behavior of the kernel. In the case of Cilium, it provides sidecar-less high-performance networking, advanced load balancing, and more. We’re excited to welcome more eBPF-based projects into the cloud native ecosystem and look forward to watching Cilium help grow the eBPF ecosystem.”

As a CNCF incubating project, Cilium has planned a full roadmap and is actively adding new features and functionality.

Future plans

The team will be adding new service mesh functionality, including support for the OpenTelemetry project and L7 load balancing controls, building on the existing Envoy Proxy integration.

The team will also incorporate additional features for on-premises deployments, including advanced IPAM (IP Address Management) modes, multi-homing, service chaining, and enhanced support for external workloads. Finally, the team will focus on evolving security capabilities by adding further identity integrations and deeper workload visibility, and by continuing to focus on identity-based enforcement.
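For readers unfamiliar with the term, identity-based enforcement means that policy is attached to what a workload is (typically its labels) and not to its IP address, which can change whenever a pod is rescheduled. The following is a minimal conceptual sketch under that assumption. It is not Cilium's code or API: the `IdentityAllocator` and `Policy` classes, the label names and the port numbers are all hypothetical.

```python
from typing import Dict, FrozenSet, Set, Tuple


class IdentityAllocator:
    """Assigns one numeric identity per distinct set of labels."""

    def __init__(self) -> None:
        self._ids: Dict[FrozenSet[Tuple[str, str]], int] = {}
        self._next_id = 1

    def identity_for(self, labels: Dict[str, str]) -> int:
        # Workloads with identical labels share an identity,
        # regardless of which IP address each one happens to have.
        key = frozenset(labels.items())
        if key not in self._ids:
            self._ids[key] = self._next_id
            self._next_id += 1
        return self._ids[key]


class Policy:
    """Default-deny policy keyed by (source identity, destination identity, port)."""

    def __init__(self) -> None:
        self._allowed: Set[Tuple[int, int, int]] = set()

    def allow(self, src_identity: int, dst_identity: int, port: int) -> None:
        self._allowed.add((src_identity, dst_identity, port))

    def permits(self, src_identity: int, dst_identity: int, port: int) -> bool:
        return (src_identity, dst_identity, port) in self._allowed


# Usage: two frontend replicas share an identity, so one rule covers both.
allocator = IdentityAllocator()
frontend_a = allocator.identity_for({"app": "frontend"})
frontend_b = allocator.identity_for({"app": "frontend"})
backend = allocator.identity_for({"app": "backend"})

policy = Policy()
policy.allow(frontend_a, backend, 8080)
```

Because both frontend replicas resolve to the same identity, the single rule admits traffic from either of them to the backend on port 8080, and anything not explicitly allowed is denied.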