Diving into Open Data Lakes Analytics

Let’s take a quick dip into Open Data Lakes Analytics.

But before we do, we need to cross off some names and definitions. This story starts with Ahana, a self-styled ‘self-service’ analytics company for Presto.

Presto, for those that need reminding, an in-memory distributed SQL engine that is faster than other compute engines in the disaggregated stack. It is federated and can query relational & NoSQL databases, data warehouses, data lakes and more besides.

We said data lakes, that mass of uncharted unassembled unstructured data that has yet to be parsed and partitioned or managed and mollycoddled through the initial stages of deduplication and deliverance that it will need to experience if it is every to be useful for real world analytics.

So from data lakes to open data lake analytics then… this is how the story develops.

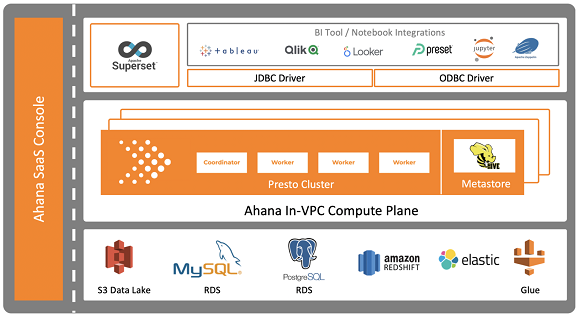

Ahana late last year announced Ahana Cloud for Presto, a first cloud-native managed service focused on Presto on Amazon Web Services (AWS).

Additionally, Ahana announced a go-to-market solution in collaboration with Intel via its participation in the Intel Disruptor Program to offer an Open Data Lake Analytics Accelerator Package for Ahana Cloud users that leverages Intel Optane on the cloud with AWS.

What is Open Data Lake Analytics?

According to Ahana, an Open Data Lake Analytics approach is a technology stack that includes open source, open formats, open interfaces and open cloud, a preferred approach for companies that want to avoid proprietary formats and technology lock-in that come with traditional data warehouses.

“The architecture consists of a loosely coupled disaggregated stack that enables querying across many databases and data lakes, without having to move any of the data. Presto is used to query data directly on a data lake without the need for transformation; your data can be queried in place. You can query any type of data in your data lake, including both structured and unstructured data,” notes Ahana, in an explanatory statement.

Knowing me, knowing you – Ahana!

Cofounder and chief product officer at Ahana Dipti Borkar says that by abstracting away the complexities of deployment, configuration and management, platform teams can now deploy ‘self-service’ Presto for open data lake analytics as well as analytics on a range of other data sources.

Qubole (an Open Data Lake platform company) writes more on this and says that an open data lake ingests data from sources such as applications, databases, data warehouses and real-time streams.

According to Qubole, “An Open Data Lake supports both the pull and push based ingestion of data. It supports pull-based ingestion through batch data pipelines and push-based ingestion through stream processing. For both these types of data ingestion, an Open Data Lake supports open standards such as SQL and Apache Spark for authoring data transformations. For batch data pipelines, it supports row-level inserts and updates — UPSERT — to datasets in the lake.”

The company says that it then formats and stores the data into an open data format, such as ORC and Parquet, that is platform-independent, machine-readable, optimised for fast access and analytics, and made available to consumers without restrictions that would impede the re-use of that information.

Apache Parquet is a columnar storage format available to any project in the Hadoop ecosystem, regardless of the choice of data processing framework, data model or programming language.

There’s more to learn here, we will return for a second dip in this lake.

Approved image source: Ahana