The death of the data model?

Agile developers tend to view data modeling as a bottleneck preventing them from delivering value.

This is the view held by Pascal Desmarets, founder and CEO of Hackolade, a Belgium-based startup that focuses on the importance of proper schema design in microservices architectures.

TechTarget defines data modeling as the process of documenting a complex software system design as an easily understood diagram, using text and symbols to represent the way data needs to flow. The diagram can be used to ensure efficient use of data, as a blueprint for building new software, or for re-engineering a legacy application.

Desmarets insists that enterprise data models, though a worthy ambition, take too long to develop and are difficult to keep up-to-date.

Model reinvention

He says that his firm proposes a new methodology to reinvent data modeling and make it relevant again in a developer-driven environment.

“Developers are correct in arguing that data modeling is not an end in itself. But a data model representing an abstraction of the requirements for information systems, becomes very useful if it leads to [a] good schema design. The schema acts as a contract between applications and becomes the authoritative source of the context structure and meaning, leading to higher data quality and better coherence throughout. This is critical to making sense of the huge quantity of data being accumulated and exchanged to feed Machine Learning, AI and BI,” said Desmarets.
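To make the 'schema as a contract' idea concrete, here is a minimal sketch in Python. It is not Hackolade's method or any particular product's API: the `ORDER_SCHEMA` fields and the `violations` helper are hypothetical names invented for illustration, and a real system would more likely use a standard such as JSON Schema.

```python
# Hypothetical contract: the fields every "order" document must carry,
# and the Python type each one must have. Names are illustrative only.
ORDER_SCHEMA = {
    "order_id": str,
    "customer_name": str,
    "total": float,
}


def violations(document: dict, schema: dict) -> list:
    """Return a list of human-readable problems; empty means the contract holds."""
    problems = []
    for field_name, expected_type in schema.items():
        if field_name not in document:
            problems.append(f"missing field: {field_name}")
        elif not isinstance(document[field_name], expected_type):
            problems.append(
                f"wrong type for {field_name}: expected {expected_type.__name__}"
            )
    return problems


# A producer that honours the contract, and one that does not.
good = {"order_id": "o-1001", "customer_name": "Ada", "total": 20.0}
bad = {"order_id": 1001, "total": 20.0}

print(violations(good, ORDER_SCHEMA))
print(violations(bad, ORDER_SCHEMA))
```

Any application that reads or writes orders can run the same check, which is what gives the schema its role as the shared, authoritative description of the data.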

He reminds us that some have advocated a ‘code-first’ approach and embraced ‘schema-less’ databases.

But, says Desmarets, the typical lifespan of applications is much shorter than the lifespan for data — and storing unstructured data is not an end in itself either.

It appears that many companies are realising that data quality and data consistency are higher with a ‘design-first’ approach, as long as the process remains Agile and takes advantage of the dynamic schemas of NoSQL databases.

“For data modeling to be Agile, a number of traditional techniques need to evolve. In particular, conceptual modeling should be replaced by Domain-Driven Design, a software development approach created by Eric Evans. DDD enables teams to focus on what is core to the success of the business, while tackling complexity in software design with a collection of patterns, principles and practices,” said Desmarets.

He thinks that while conceptual and logical modeling were well adapted to waterfall development and the rules of normalisation used in relational databases, Domain-Driven Design is better suited to aggregates and denormalisation used in APIs and NoSQL databases.

Bypass logical modeling

It is also, argues Desmarets, an opportunity to bypass the logical modeling step, which is no longer needed since DDD concepts map directly to NoSQL data structures.
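As a rough illustration of how a DDD aggregate might map straight onto a NoSQL-style document, consider the Python sketch below. The `Order` and `OrderLine` classes and the `to_document` helper are hypothetical examples, not taken from Desmarets or Hackolade. The point is only that the aggregate root and its children become a single nested, denormalised document rather than several normalised tables.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class OrderLine:
    """Child entity: only ever reached through its parent Order."""
    sku: str
    quantity: int
    unit_price: float


@dataclass
class Order:
    """Aggregate root: the unit that is loaded and saved as a whole."""
    order_id: str
    customer_name: str  # denormalised copy, not a foreign key to a customer table
    lines: List[OrderLine] = field(default_factory=list)

    def total(self) -> float:
        return sum(line.quantity * line.unit_price for line in self.lines)


def to_document(order: Order) -> dict:
    """Flatten the aggregate into one nested dict, ready for a document store."""
    doc = asdict(order)  # recursively converts the nested OrderLine objects
    doc["total"] = order.total()  # precomputed so reads need no join or recalculation
    return doc


order = Order(
    "o-1001",
    "Ada",
    [OrderLine("sku-1", 2, 5.0), OrderLine("sku-2", 1, 10.0)],
)
document = to_document(order)
print(json.dumps(document, indent=2))
```

Because the order lines live inside the order document, a screen that displays an order needs a single read, which is the kind of query-driven shape a relational logical model would normally split apart.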

“By combining Domain-Driven Design with pragmatic process flowcharting, plus wireframing of application screens and reports, analysts and designers can easily apply a query-driven approach to application-specific dynamic schema design,” said Desmarets.

So it appears, if what Desmarets is suggesting holds water, that the nature of data modeling is changing in some places.

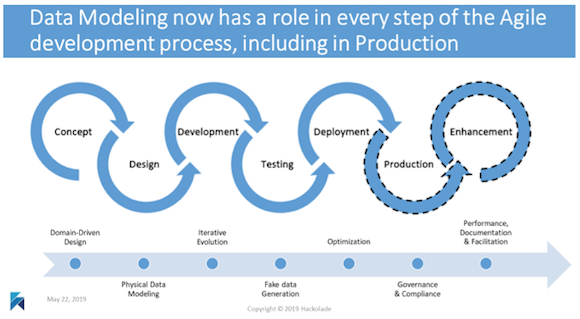

The lifecycle used to be heavily front-loaded in a serial process. Today, data modeling takes place before every Agile sprint… and throughout the lifetime of an application. That’s this year’s model for data modeling.

Image: Hackolade