Run:ai goes full-stack hyper-optimised AI on Nvidia DGX

Tech firms spell their names in creative ways.

Not content with the dot.something style now common to many, Tel Aviv-based AI workload orchestration company Run:ai almost went full scope resolution operator (::) before settling on a single colon.

Teaming up with a company known for its work in GPU-centric high-performance computing and its insistence upon capitalisation, Run:ai has now launched the Run:ai MLOps Compute Platform (MCP), powered by Nvidia DGX systems.

It is pitched as a full-stack AI solution for enterprises.

Nvidia DGX is a line of servers and workstations that use GPGPU (general-purpose computing on graphics processing units) to accelerate deep-learning applications, and Run:ai has used this hardware to run its Atlas software.

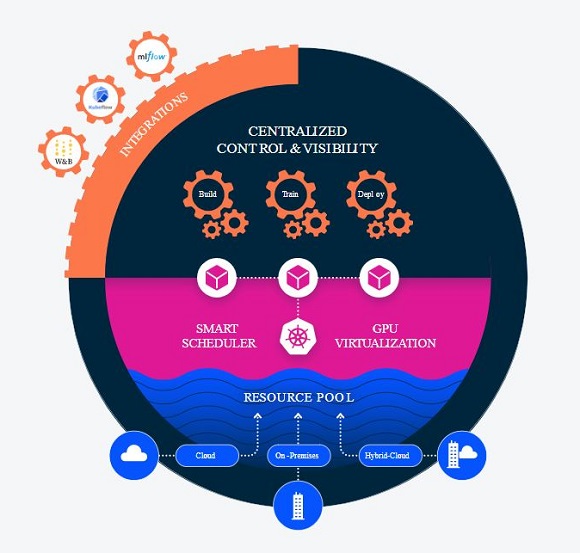

Run:ai MCP is an end-to-end AI infrastructure platform that orchestrates the hardware and software complexities of AI development and deployment.

As AI adoption grows, each level of the AI stack, from hardware to high-level software, can create challenges and inefficiencies, with multiple teams competing for the same limited GPU time.

This can give rise to so-called ‘shadow AI’, where individual teams buy their own infrastructure.

This decentralised approach leads to idle resources, duplication, increased expense and delayed time to market. Run:ai says MCP is designed to overcome these potential roadblocks to successful AI deployments.

“Enterprises are investing in data science to deliver on the promise of AI, but they lack a single, end-to-end AI infrastructure to ensure access to the resources their practitioners need to succeed,” said Omri Geller, co-founder and CEO of Run:ai. “This is an AI solution that unifies our AI workload orchestration with Nvidia DGX systems — to deliver compute density, performance and flexibility. Our early design partners have achieved [positive] results with MCP, including a 200-500% improved utilisation and ROI on their GPUs, which demonstrates the power of this solution to address the biggest bottlenecks in the development of AI.”

According to Matt Hull, Nvidia’s vice president of global AI datacentre solutions, Run:ai MCP, as an integrated solution featuring Nvidia DGX systems and the Run:ai software stack, makes it easier for enterprises to add the infrastructure they need to scale their success.

Run:ai MCP runs on Nvidia DGX systems with Nvidia Base Command, a platform that includes enterprise-grade orchestration and cluster management, libraries that accelerate compute, storage and network infrastructure, and system software optimised for running AI workloads. The full-stack offering can be obtained from distributors and installed with enterprise support, including direct access to experts from both companies.

Self-service access

With MCP, compute resources are gathered into a centralised pool that can be managed and provisioned by one team, but delivered to many users with self-service access.

A cloud-native operating system helps IT manage everything from fractions of a single Nvidia GPU up to large-scale distributed training. Run:ai says its workload-aware orchestration ensures that every type of AI workload gets the right amount of compute resources when it needs them.
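To make the pooling idea concrete, here is a minimal Python sketch of a central GPU pool that hands out fractional shares to teams on request and refuses requests once capacity runs out. It is purely illustrative and assumes nothing about how Run:ai actually schedules work: the `GpuPool` class and its `request`, `release` and `free` methods are hypothetical names invented for this example, not part of any Run:ai or Nvidia API.

```python
from dataclasses import dataclass, field


@dataclass
class GpuPool:
    """Hypothetical central GPU pool (not a Run:ai API), tracked in fractional GPU units."""
    total_gpus: int
    # Team name -> GPUs currently granted (may be fractional, e.g. 0.5)
    allocations: dict[str, float] = field(default_factory=dict)

    def free(self) -> float:
        """Capacity not yet handed out to any team."""
        return self.total_gpus - sum(self.allocations.values())

    def request(self, team: str, gpus: float) -> bool:
        """Self-service request: grant the share only if the pool can cover it."""
        if gpus <= 0 or gpus > self.free():
            return False
        self.allocations[team] = self.allocations.get(team, 0.0) + gpus
        return True

    def release(self, team: str) -> None:
        """Return everything a team holds to the shared pool."""
        self.allocations.pop(team, None)


if __name__ == "__main__":
    pool = GpuPool(total_gpus=8)
    print(pool.request("notebooks", 0.5))  # half a GPU for interactive work
    print(pool.request("inference", 0.25))  # a quarter GPU for serving
    print(pool.request("training", 4))      # four whole GPUs for training
    print(pool.request("training", 4))      # refused: only 3.25 GPUs left
    print(pool.free())
```

One team owns the pool, while many users draw from it without buying their own hardware, which is the "shadow AI" problem the platform is meant to avoid. A real orchestrator would also handle queuing, priorities and preemption rather than simply refusing requests.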

The platform provides MLOps tooling while leaving developers free to use their preferred tools through integrations with Kubeflow, Airflow, MLflow and others.

Run:ai — it runs, well, AI.