Modern development - Sumo Logic: atomized applications demand upfront observability

This series is devoted to examining the leading trends that go towards defining the shape of modern software application development.

As we have initially discussed here, with so many new platform-level changes now playing out across the technology landscape, how should we think about the cloud-native, open-compliant, mobile-first, Agile-enriched, AI-fuelled, bot-filled world of coding and how do these forces now come together to create the new world of modern programming?

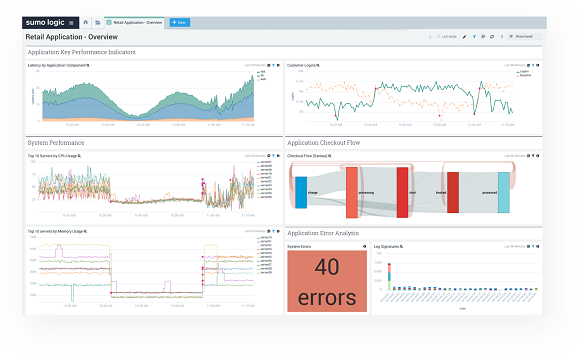

This piece is written by Ben Newton in his capacity as director of operational analytics at Sumo Logic. The company is known for its ‘continuous intelligence’ approach to machine data metrics and logs for cloud operations management, which enables customers to carry out analytics and detect potential attacks on their infrastructure.

Newton writes as follows…

The ‘atomization’ of applications

Modern applications get built to meet the needs of today’s customers.

We have more people relying on applications or online services to do what they want to do – in response, we have broken down our applications to make them easier to update and change as needed. Using a microservices-based approach, we have ‘atomized’ our applications and rebuilt them so we can make changes really quickly… and those changes don’t take down the whole system.

From that perspective, particularly when considering customer needs, this is a huge boon. If something is not performing well, we can see the issue and make changes. This makes it easier to help customers and provide that positive experience that customers expect today.

But from a back-end perspective, the process is not as simple. Rather than having a few elements of an application to consider, we can now have applications that run across multiple cloud platforms, internal and third-party services, different delivery mechanisms and various devices. Each of those application components, in turn, generates data (and metadata) on performance and actions.

The number of components can run to hundreds or thousands, each providing its own sources of machine data, such as log files, alongside metrics and tracing data on user transactions. Each of the services can scale based on the volume of customer inquiries coming through, creating more or less data based on that usage. All this data can and should be available to developers to use as part of their working practices.

However – while customers get a better experience when we make this change – as developers we face a more complex problem when it comes to using this data. Rather like a lumpy pillow, we have moved the problem around for ourselves rather than fixing it completely. Finding issues when something goes wrong is more challenging if we do not plan in advance.

Upfront observability

Using the combination of logs, metrics, and tracing data for observability will help in this process. However, having this data is not enough on its own.

Thinking about observability upfront can help us to define what we expect to see and what we want to achieve. There are lessons for all developers in Google’s Site Reliability Engineering books on how to design and develop systems that are sustainable and able to cope with outages, for example. We can look for the issues that can have the most potential impact on customers and reverse engineer how to track and trace those problems in advance.

By starting our development process with an objective in mind, we can look at how that objective is reflected in the software we create and then manage.

For example, an eCommerce application may live or die on its performance. Should we, therefore, have a blanket availability objective in place, or a more customer-specific one for shopping basket checkouts at busy times of the day? By putting an objective in place – say, sub-one second checkout times during high customer traffic periods – we can define how well we are doing and whether our changes are effective.
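As a rough illustration of the checkout objective described above, the following Python sketch measures what share of checkouts during peak hours completed in under one second. The `CheckoutSample` type, the `slo_compliance` function, the choice of peak hours and the sample data are all hypothetical, invented for this example; a real system would pull these measurements from its own metrics or tracing data.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional, Set


@dataclass
class CheckoutSample:
    """One observed checkout (hypothetical record shape)."""
    timestamp: datetime
    duration_seconds: float


def slo_compliance(
    samples: Iterable[CheckoutSample],
    peak_hours: Set[int],
    threshold_seconds: float = 1.0,
) -> Optional[float]:
    """Return the fraction of peak-hour checkouts faster than the threshold.

    Returns None when there are no peak-hour samples to judge.
    """
    peak = [s for s in samples if s.timestamp.hour in peak_hours]
    if not peak:
        return None
    good = sum(1 for s in peak if s.duration_seconds < threshold_seconds)
    return good / len(peak)


# Illustrative data only: four checkouts at lunchtime, one overnight.
samples = [
    CheckoutSample(datetime(2020, 1, 6, 12, 5), 0.42),
    CheckoutSample(datetime(2020, 1, 6, 12, 30), 1.30),
    CheckoutSample(datetime(2020, 1, 6, 13, 10), 0.75),
    CheckoutSample(datetime(2020, 1, 6, 13, 45), 0.91),
    CheckoutSample(datetime(2020, 1, 6, 3, 0), 2.50),
]

# Assume, for the sake of the example, that 12:00-13:59 is the busy period.
compliance = slo_compliance(samples, peak_hours={12, 13})
print(f"Peak-hour checkouts under 1s: {compliance:.0%}")
```

The slow overnight checkout is deliberately ignored here, because the objective is scoped to busy periods rather than to blanket availability. Whether a given compliance figure is acceptable depends on the target the team has agreed with the business.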

This approach to data can provide developers with more insight into how their changes are affecting performance over time. As well as finding faults more efficiently, we can look at how to prevent issues before they affect customers, and at the wider business impact that those changes have.

Data, but in context

It is worth spending more time on how we define issues like reliability around software using data. Taking our observability data and looking at it continuously can help show where things are improving before and after a change. However, this process can only take place when we understand our data in the right context… and when we as developers can use that data effectively within the business.
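One simple way to look at data before and after a change is to split measurements at the time of a deployment and compare a summary statistic for each side. The sketch below does this with median latency. The function name `median_shift`, the deployment time and the figures are assumptions made up for illustration, and a median over a handful of points is only a starting point rather than a full analysis.

```python
from datetime import datetime
from statistics import median
from typing import List, Tuple


def median_shift(
    measurements: List[Tuple[datetime, float]],
    change_time: datetime,
) -> Tuple[float, float, float]:
    """Compare median latency before and after a change.

    Returns (median_before, median_after, difference), where a negative
    difference means latency went down after the change.
    """
    before = [value for ts, value in measurements if ts < change_time]
    after = [value for ts, value in measurements if ts >= change_time]
    if not before or not after:
        raise ValueError("need measurements on both sides of the change")
    m_before = median(before)
    m_after = median(after)
    return m_before, m_after, m_after - m_before


# Hypothetical latency readings (seconds) around a 12:00 deployment.
deploy = datetime(2020, 1, 6, 12, 0)
readings = [
    (datetime(2020, 1, 6, 11, 0), 0.9),
    (datetime(2020, 1, 6, 11, 20), 1.1),
    (datetime(2020, 1, 6, 11, 40), 1.0),
    (datetime(2020, 1, 6, 12, 10), 0.7),
    (datetime(2020, 1, 6, 12, 30), 0.8),
    (datetime(2020, 1, 6, 12, 50), 0.6),
]

m_before, m_after, diff = median_shift(readings, deploy)
print(f"median before: {m_before:.2f}s, after: {m_after:.2f}s, shift: {diff:+.2f}s")
```

A number like this only becomes meaningful in context: a shift in latency matters more if it can be tied back to an objective the business cares about, such as checkout times at busy periods.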

Solely looking at observability data will only solve development problems after the fact.

We might be able to fix problems faster than before, but we will remain in that silo. Instead, we should encourage the whole development process to look at larger issues, like reliability and performance. Already, we can point to the impact that our changes have. Should we not look at making recommendations too, so that we can continue that process of improvement?

Image source: Sumo Logic