Domino ‘fall’ release topples challenges across AI assembly, scale & governance

Domino Data Lab is a specialist provider of enterprise AI platform technologies.

The company has used its “fall” (not a Dominoes game-related pun, it just means autumn in this sense) release and integrations to provide more options for highly regulated enterprises to work with AI and data under demanding policy compliance regulations.

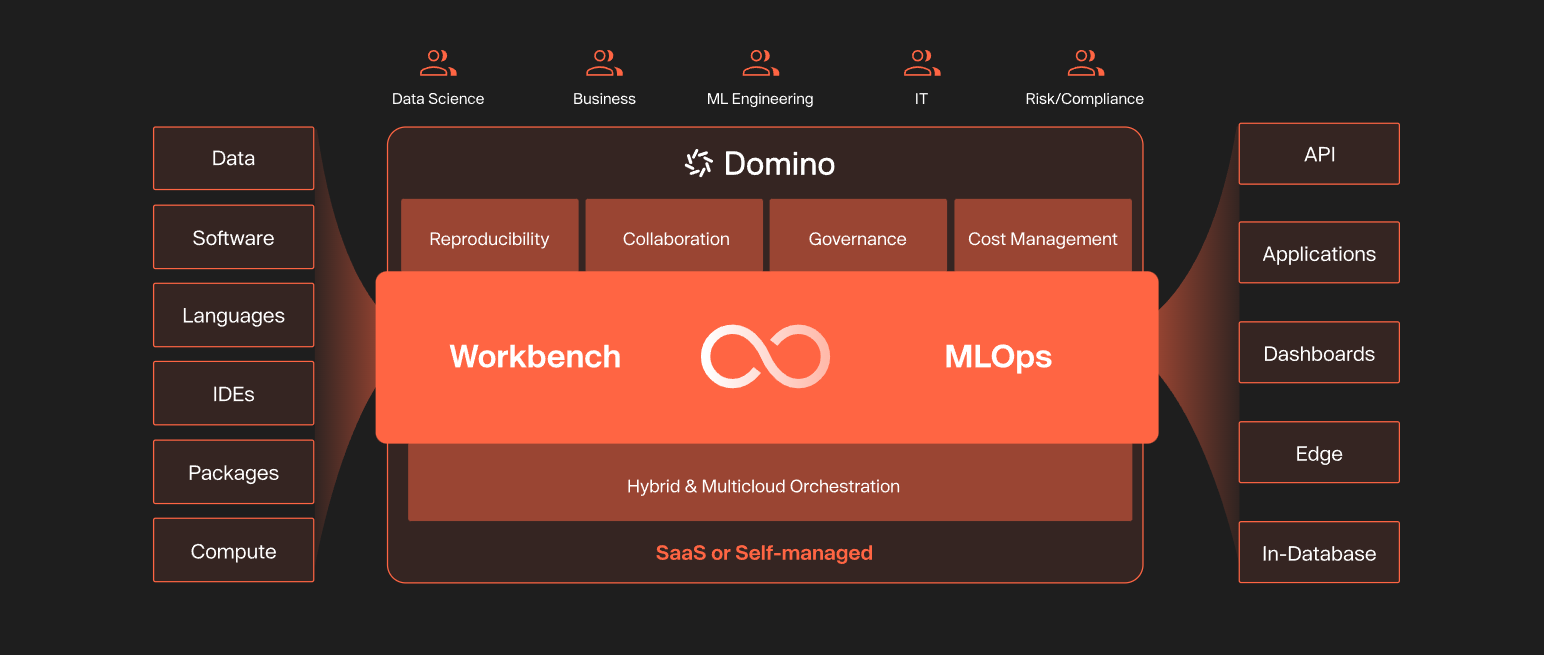

The firm has announced new platform capabilities and integrations with Amazon Web Services (AWS), NetApp and capitalisation-focused GPU specialist Nvidia that take the form of a set of single-platform capabilities across data, development and deployment functions for AI workloads in the cloud or on-premises.

Enterprise AI initiatives require diverse technologies, obviously.

They need unprecedented data and compute power. They also need coordination across AI, IT and risk teams.

AI lifecycle coordination

Domino says that the “bespoke coordination of these resources” along the AI lifecycle can lengthen time-to-value, drive up costs and create risk. The company suggests that to regain momentum and control, enterprises need one place to orchestrate resources, technology and people across the lifecycle.

“The pace of AI innovation has brought immense complexity for enterprises needing to orchestrate diverse tools and infrastructure,” said Nick Elprin, CEO and co-founder at Domino. “Domino’s latest capabilities simplify this process, enabling organisations to manage and scale AI initiatives seamlessly across any environment.”

Domino says it can further reduce AI time-to-value by streamlining the model lifecycle from experimentation to production with new project templates and support for Nvidia NIM microservices, part of the upper-case-centric organisation’s Nvidia AI Enterprise software platform.

With support for lowercase Nvidia NIM microservices, the platform allows enterprises to move generative AI proofs of concept into production across hybrid clouds.

Open source pre-trained models

Domino’s support for NIM microservices enables teams to innovate using dozens of open source pre-trained models such as Llama 3.1 and Mistral NeMo 12B – all with built-in governance, secure access and enhanced performance through Nvidia AI Enterprise.

“Domino’s new Project Templates allow enterprises to define and share AI project best practices – including code, datasets, environments and configurations. These templates increase the velocity, quality, and predictability of data science and AI projects. In collaboration with AWS and NetApp, Domino is introducing new features to help enterprises scale AI deployments efficiently while reducing costs,” notes the company, in a press statement.

Enterprises can now choose to deploy models, including large language models (LLMs), to Amazon SageMaker for high performance and cost efficiency, while maintaining visibility and governance in Domino. This delivers enterprises more options, across on-premises and hybrid cloud environments, for scaling key AI lifecycle stages such as inference.

Domino says it has expanded its accelerated compute options with support for AWS Trainium and AWS Inferentia chips for cost-efficient training and inference workloads.

No DevOps overhead

Domino Volumes for NetApp ONTAP, the latest product of a collaboration announced in October, allows enterprises using NetApp ONTAP and Domino to access their NetApp data anywhere across any environment, without DevOps overhead.

“NetApp and Domino both fundamentally believe that AI will be driven by the hybrid cloud to turbocharge AI workflows at scale and on budget,” said Jonsi Stefansson, senior vice president and chief technology officer at NetApp. “NetApp’s innovations in intelligent data infrastructure are bringing AI to our customers’ data, wherever and however they need it. By collaborating with NetApp, Domino is giving data scientists and data engineers the ability to secure, unify and scale their data operations alongside enhanced AI tooling to achieve their AI goals.”

Domino also says that its new capabilities help enterprises mitigate risks while keeping AI initiatives on track. Domino Volumes for NetApp ONTAP combines Domino’s traceability and reproducibility with the protection provided by NetApp ONTAP.

Mike Leone, practice director and principal analyst at Enterprise Strategy Group, says that by collaborating with NetApp and integrating its data solutions into Domino’s platform, Domino is enhancing its position in the enterprise AI market, while enabling NetApp to advance its own AI ecosystem.

He thinks that in highly regulated industries like biopharmaceuticals, where AI governance is crucial for speed and safety, this can enable users to accelerate AI deployments at enterprise scale.

Introduced in 2024, Domino Governance automates and orchestrates the collection, review, and tracing of materials required to ensure enterprise compliance with internal and external policies, mitigating risks and driving more value.

Domino’s Fall Release, including model deployment to Amazon SageMaker, Project Templates and support for Nvidia NIM microservices, is generally available now. Domino Volumes for NetApp ONTAP will be available in Domino Cloud in Q1 2025.

Domino enterprise AI platform: a unified platform to orchestrate and scale AI.