DataStax HQ Study Tour 2023

It’s always good to speak to real software engineers inside the actual organisations that they work within; as such, getting away from the formalised staged sessions that make up the annual technology conference roster always feels like a positive thing.

Engaging in what these days is increasingly known as a ‘study tour’ opportunity is an insightful exercise.

Real-time AI and cloud vector database company DataStax with its foundations built on Apache Cassandra opened its doors to the Computer Weekly Developer Network team this month to deconstruct, clarify and discuss its core technology proposition.

Starting with Chet Kapoor, chairman & CEO of DataStax, we got the big picture on where DataStax is developing – and specifically, how the Astra database is now providing tools for software application development professionals building generative AI applications and services… and exactly what that means for the business world at large.

Addressing the question of whether it is people vs. AI (in terms of the idea that AI models will take away peoples’ jobs), Kapoor offers a more practical suggestion and says that it’s not people vs. AI, it’s people vs, people with AI i.e. our new intelligence engines will bypass those people who fail to embrace them and they will stand contra to people who work with AI, more effectively and efficiently.

JSON API for Astra DB

Effortless, affable and engagingly informal in his delivery, CEO Kapoor also pointed to key product developments for DataStax this month which currently gravitate around its new JSON (JavaScript Object Notation) API for the company’s own Astra DB database-as-a-service (DBaaS), which is built on Apache Cassandra.

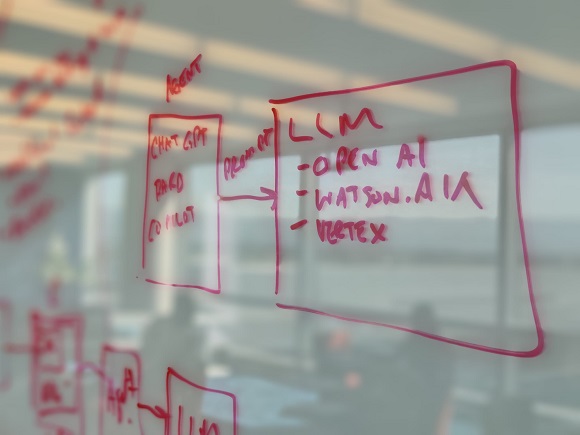

Software developers working alongside (and increasingly now ahead of) data scientists on the creation of vector databases with Large Language Models (LLMs) being used to construct generative AI functions will now be offered this technology (via an open source data API gateway known as Stargate) as a route to create AI applications with vector search capabilities is to use the new Astra DB JSON API.

Moving out of the CEO’s corner office to a classroom environment overlooking the expanses of Silicon Valley, DataStax product manager Chris Latimer provided a lesson designed to deliver an overview of the relationships between LLMs, software agents, control layers including RAG (retrieval augmented generation – an AI framework for improving the quality of LLM-responses by grounding the model on external sources and the User Interface (UI) – in doing so, he also positioned these incremental functions in terms of their relationship with data repositories and the data layer itself.

Latimer covered how DataStax Astra DB works as a platform and the services that it delivers for customers around generative AI. This technology will provide an opportunity to review the challenges that developers have around building generative AI applications, how they want to work around applications and new service deployments and how DataStax’s Astra DB meets those requirements. This work can in fact fit into the more broad requirements that developers have around cloud-native applications, services and delivery for their organisations.

“We are super-focused on gen AI and our vector database (as an enabling technology for generative AI applications) right now. But as we talk to customers, we find that we uncover new operational insights all the time. When they are making calls to openAI and LLM models, that works okay for structured transactional data – but for unstructured knowledge stores that might be inside an organisation, it’s a lot harder for the model to understand context… so this is why the need for semantic search (in products such as Astra DB) are able to really come to the fore as a means of handling these requirements,” said Latimer.

If open models have been trained on protocols that exist inside a business, the semantic models and inference are not there, so this encompasses many of the functionalities that DataStax is building.

“With vector search, Astra DB is able to understand the semantic meaning of a word (‘original’ could mean first, or genuine or creative for example), so we are storing bigger and bigger vectors by working with organisations like openAI, HuggingFace and Google’s Vertex AI to be able to build these technologies. It’s not larger vectors, but larger numbers of vectors and being able to provide meta-level filtering to do an initial narrowing on vectors in order to be able to use the best available items for any given task,” explained Latimer.

Latency-sensitive systems of engagement

With the need to now build real-time AI and the need to work with LLMs to get comprehensive answers quickly, Latimer says that often we want to denormalise data and take a cache of data, but this means using an outdated data store. By taking an event-driven approach to updating that cache when events happen, they can be brought into the data-streaming platform that exists in the DataStax Astra platform itself. It’s all about latency-sensitive systems of engagement that need to be reengineered for new and modern use cases with gen AI behind them.

The road here leads us on a journey towards ‘prompt engineering’ i.e. the process we use to define the way we want the LLM to interact with users based upon the types of requests that they will naturally come forward with in terms of their daily use of apps… and this leads us onwards to use of RAG to provide additional contextual insight.

Moving to speak to Bryan Kirschner, vice president of strategy, DataStax, we were given a chance to follow up on CEO Kapoor’s conversation at the beginning of the day around company strategies and linking them to generative AI deployments. Kirschner also covered the company’s data on AI and results (based on a survey with enterprise organisations) of how real-world business decisions around generative AI are made.

All technically robust enough so far then, but how does DataStax communicate its messages to users and developers, partners and practitioners and outwards to the wider market at large?

This is where chief marketing officer (CMO) Jason McClelland comes in. Having worked in key marcoms roles at a number of enterprise technology organisations, McClelland’s grounding in reality perhaps comes from the fact that he started life as a truck driver working for Sun Microsystems (where he taught himself Java by taking books from the office home at night). He actually ended up getting a job at a startup and worked his way up through technology support and more to join the C-suite where he stands today.

“In our business, it’s all about awareness and demand generation, so the process is focused on telling people what DataStax is, clarifying how the brand provides value and then making them want it. We have built tremendous momentum around our vector database as every developer up to the enterprise leader is looking to leverage generative AI within their business. Our goal now is to continue to deliver value to our users as they find new and different ways to build with gen AI. Our focus right now is helping customers leverage their proprietary data to augment LLMs in an impactful, safe, and scalable way via processes like retrieval augmented generation,” said McClelland.

All rise for Doctor Charna

Rounding out the day’s sessions with some deep insight into the real working structure of AI models and the way they are applied to the coal face of software application development today, we moved into a session hosted and presented by Dr.Charna Parkey in her capacity as real-time AI product & strategy leader.

Parkey’s presentation was initially designed to explain how DataStax approaches real-time generative AI problems with its open source projects. Alongside JVector, Parkey covered LangStream and CassIO, two projects created by DataStax and launched as open source for the community.

LangStream helps developers bring real-time data into their generative AI applications. By launching elements of the data pipeline using event data, developers can ensure their applications use the latest data in their services rather than relying on stale data. LangStream also allows developers to create and scale applications created in LangChain, by running each task as a microservice that can run and scale up based on demand.

Day 1, day 2 & day 14 development

CassIO is a DataStax project created with Google with the intention of making it easier to integrate the Apache Cassandra database with generative AI tools (such as LangChain and LlamaIndex) so that now, CassIO provides a way to use the scale and performance of Cassandra with generative AI applications, from hosting the transaction data created by applications through to operating as a feature store for predictive AI and now as a vector database. This multi-modal approach means that developers can scale up around data as much as they require.

“LangStream integrates with LLMs from OpenAI, Google Vertex AI and Hugging Face alongside Vector databases like Pinecone and DataStax, plus Python libraries like LangChain and LlamaIndex. What we do with this technology is work with developers to help them work out a) what they want and b) what they need to do in the process of building generative AI with these technologies. Although LangChain came about, it did not provide quite the right guidance on the ‘how factor’ behind how to run an event-driven architecture… and so this is why DataStax developed LangStream,” explained Parkey.

She reminds us that there’s day-1 development, but we need to think about the challenges developers might have in their day-2 deployment scenarios… and we can also think about day-14 when a developer might need to really deconstruct how a piece of technology works in order to really understand its operational style, structure and substance.

Explicit & implicit memories

An AI purist and theorist at heart, Parkey clarified the mechanics of human brain memory and understanding before discussing how we might take the basic split that plays out here forward into the way we build our AI architectures. For us humans, there are two core types of memory:

- An explicit memory is a memory of an event, like a birthday.

- An implicit memory is knowledge of a thing, place, entity or whatever.

We can now apply additional implicit knowledge to the data intelligence in a database in order to really understand what is going on. RAG is a form of explicit knowledge because it adds contextual context.

“LangStream integrates LLMs to make that explicit & implicit connection and it might typically combine (let’s say) what could be five LLMs to combine knowledge and understand more of the whole contextual intent behind a query – so, for example, if we wanted to build a new decking section in our back yard or garden, the model could provide a list of equipment and products needed to perform an action like that — and then also accommodate for important governance aspects such as housing regulations and so on,” said Parkey, in a move to give us tangible explanatory context.

Overall impression

These events – and the companies that are ‘brave’ enough to put them on – provide so much more proximity to the ethos, mindset, personality and technical breadth that a company has to offer. When an enterprise software organisation welcomes you into its offices, allows you to walk past its employees at their desks and offers you the chance to eat sandwiches with the staff in the canteen, then anyone fortunate enough to get that experience is able to see how a technology business really works.

It is, if you will, pretty much the opposite of a formalised conference or convention.

Did we eat sandwiches with DataStax? Well no, this was Silicon Valley after all and they’re much too healthy for all those carbs. We had the fish taco salad bowl with extra banana peppers and a blue raspberry sparkling water, obviously. Thanks for opening your doors and for your openness DataStax, we feel we know you better now.

Dr. Charna Parkey, real-time AI product & strategy leader, DataStax.