Data engineering - Nooks: Standardising & measuring data to run AI assistants

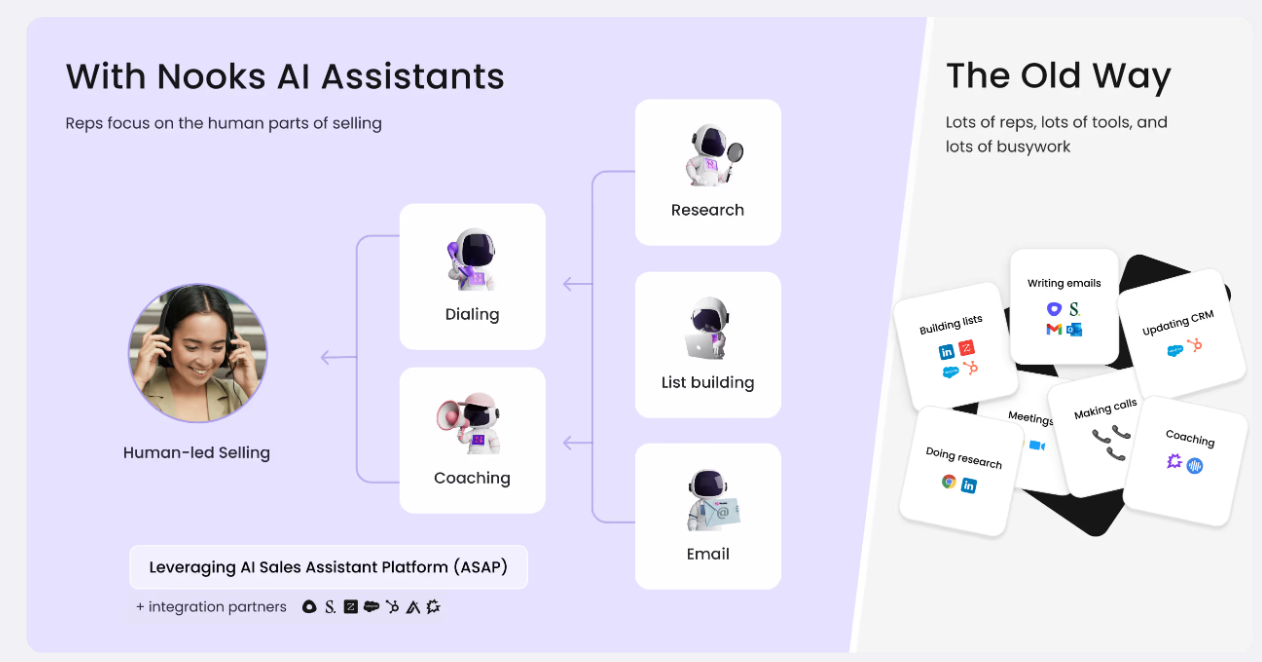

This is a guest post for the Computer Weekly Developer Network written by Nikhil Cheerla in his role as co-founder & CTO of Nooks, a company that builds an AI sales assistant platform for sales teams.

Cheerla writes in full as follows…

Data is at the heart of every successful application. It’s especially vital when building AI assistants that make people’s work more efficient. After all, a great assistant understands how to complement and support human actions, and that requires a rich set of data to learn from.

But not all data is created equal – and not all of it plays nicely together.

For AI to perform at its best, especially in applications like sales assistants, the data flowing into these systems needs to be clean, reliable and actionable. That’s where the two toughest challenges come into play: standardisation and measurement.

Why standardisation is hard (and essential)

When you’re working with data from multiple sources – CRMs, sales engagement platforms, call transcripts and even web scrapes – it’s messy. Every system has its quirks. A Salesforce database might store customer contact data one way, while HubSpot handles it completely differently. Let’s not even get started on web data, which is often ad hoc and unstructured.
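One common way to tame this is to map every source onto a single canonical record shape before anything downstream touches it. The sketch below assumes a hypothetical `Contact` schema and per-source field mappings; the source field names are illustrative assumptions rather than an authoritative description of either CRM's API, and records are assumed to arrive already flattened into dictionaries.

```python
from dataclasses import dataclass
from typing import Any, Optional

# Hypothetical mappings from source-specific field names to canonical names.
# These names are illustrative assumptions, not a definitive CRM schema.
FIELD_MAPS: dict[str, dict[str, str]] = {
    "salesforce": {"Email": "email", "FirstName": "first_name", "LastName": "last_name", "Phone": "phone"},
    "hubspot": {"email": "email", "firstname": "first_name", "lastname": "last_name", "phone": "phone"},
}


@dataclass
class Contact:
    """Canonical contact record shared by every downstream component."""
    source: str
    email: Optional[str] = None
    first_name: Optional[str] = None
    last_name: Optional[str] = None
    phone: Optional[str] = None


def normalise_contact(source: str, raw: dict[str, Any]) -> Contact:
    """Map one flattened source record onto the canonical Contact shape."""
    values: dict[str, Any] = {}
    for src_field, canonical in FIELD_MAPS[source].items():
        value = raw.get(src_field)
        if isinstance(value, str):
            value = value.strip() or None  # treat blank strings as missing
        values[canonical] = value
    if values.get("email"):
        values["email"] = values["email"].lower()  # compare emails case-insensitively
    return Contact(source=source, **values)


# Usage: the same person, stored differently by two systems.
sf = normalise_contact("salesforce", {"Email": "Ada@Example.com ", "FirstName": "Ada", "LastName": "Lovelace"})
hs = normalise_contact("hubspot", {"email": "ada@example.com", "firstname": "Ada", "lastname": "Lovelace", "phone": ""})
```

Keeping the mappings as data rather than code means adding a new source is mostly a matter of adding a new dictionary entry.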

The stakes are high.

Imagine a sales assistant providing a rep with an outdated contact history or failing to surface critical details from a previous call because a CRM field wasn’t filled out correctly. That’s not just frustrating – it can undermine the trust users have in AI.

So, how do you tackle this?

We use automated monitoring jobs to keep tabs on data quality. These jobs flag issues like missing fields or data that hasn’t been updated recently, allowing the application to adapt its recommendations. We also use flexible storage solutions like JSON blobs to handle unstructured data. This way, we’re not locked into rigid schemas and can adapt as customer needs evolve.
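A monitoring job of this kind can be quite small. The following is a minimal sketch, assuming each record is stored as a JSON blob alongside an `updated_at` timestamp; the required fields and the 90-day staleness threshold are illustrative assumptions, not Nooks' actual rules.

```python
import json
from datetime import datetime, timedelta, timezone
from typing import Optional

# Illustrative assumptions: which fields are required and when a record counts as stale.
REQUIRED_FIELDS = ("email", "company", "owner")
STALE_AFTER = timedelta(days=90)


def check_record(blob: str, updated_at: datetime, now: datetime) -> list[str]:
    """Return data-quality issues for one record stored as a JSON blob."""
    data = json.loads(blob)  # schemaless payload; keys we don't check are left alone
    issues = [f"missing field: {name}" for name in REQUIRED_FIELDS if not data.get(name)]
    age = now - updated_at
    if age > STALE_AFTER:
        issues.append(f"stale: {age.days} days since last update")
    return issues


def run_monitoring_job(rows: list[tuple[str, str, datetime]],
                       now: Optional[datetime] = None) -> dict[str, list[str]]:
    """Scan (record_id, json_blob, updated_at) rows and report only records with issues."""
    now = now or datetime.now(timezone.utc)
    report: dict[str, list[str]] = {}
    for record_id, blob, updated_at in rows:
        issues = check_record(blob, updated_at, now)
        if issues:
            report[record_id] = issues
    return report


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
rows = [
    ("acct-1", '{"email": "a@acme.example", "company": "Acme", "owner": "sam", "notes": "free text"}',
     datetime(2025, 1, 10, tzinfo=timezone.utc)),
    ("acct-2", '{"email": "", "company": "Globex"}', datetime(2024, 9, 1, tzinfo=timezone.utc)),
]
report = run_monitoring_job(rows, now)
```

Because the payload is a JSON blob, extra keys such as `notes` pass through untouched; only the fields the job cares about are checked, and the resulting report is what an application could use to soften or suppress recommendations built on a flagged record.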

… and then, measurement

If standardisation is about getting your data in order, measurement is about knowing whether the insights your AI generates are actually working. Let’s say your AI flags a renewal opportunity for a sales rep. If that opportunity doesn’t pan out, was the issue bad data, or was the AI’s interpretation of the data flawed? Figuring that out quickly is key to improving performance.
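A simple starting point for measurement is to record what happened after each insight was surfaced and aggregate by insight type. This sketch assumes a hypothetical `InsightOutcome` record with `acted_on` and `succeeded` flags; it is one plausible way to count outcomes, not a description of how Nooks measures its assistant.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class InsightOutcome:
    signal_type: str  # hypothetical label, e.g. "renewal_upcoming"
    acted_on: bool    # did the rep follow the recommendation?
    succeeded: bool   # did the opportunity pan out?


def success_rates(outcomes: list[InsightOutcome]) -> dict[str, float]:
    """Share of acted-on insights that succeeded, per insight type."""
    acted: dict[str, int] = defaultdict(int)
    wins: dict[str, int] = defaultdict(int)
    for o in outcomes:
        if o.acted_on:  # ignored insights say nothing about whether the insight was right
            acted[o.signal_type] += 1
            wins[o.signal_type] += int(o.succeeded)
    return {kind: wins[kind] / acted[kind] for kind in acted}


outcomes = [
    InsightOutcome("renewal_upcoming", True, True),
    InsightOutcome("renewal_upcoming", True, False),
    InsightOutcome("renewal_upcoming", False, False),
    InsightOutcome("competitor_mention", True, True),
]
rates = success_rates(outcomes)
```

A low rate tells you which kind of insight to investigate; on its own it does not say whether the data or the interpretation was at fault.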

We solve this by building internal tools around what we call “data citations”, which make the AI’s signals easy to debug. These tools let us trace each insight back to its source, whether that’s a CRM entry, a call transcript or a model prediction.
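One way to picture a data citation is as a pointer, carried with every signal, to the exact record and snippet it relied on. The `Citation` and `Signal` classes below are a hypothetical sketch of that idea; the field names and source labels are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Citation:
    source: str     # e.g. "crm", "call_transcript", "model_prediction" (illustrative labels)
    record_id: str  # identifier of the underlying record in that source
    excerpt: str    # the snippet the signal relied on


@dataclass
class Signal:
    description: str
    citations: list[Citation] = field(default_factory=list)

    def trace(self) -> list[str]:
        """One line per cited source, so a reviewer can check each input directly."""
        return [f"{c.source}:{c.record_id} -> {c.excerpt}" for c in self.citations]


signal = Signal(
    "Renewal opportunity for Acme",
    [
        Citation("crm", "opp-42", "Contract ends 2025-03-31"),
        Citation("call_transcript", "call-7", "We're reviewing vendors next quarter"),
    ],
)
for line in signal.trace():
    print(line)
```

The design choice is that citations travel with the signal rather than being reconstructed later, so the evidence a user sees is exactly the evidence the system used.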

For example, let’s say a salesperson noted in the CRM that their prospect wanted a follow-up in six months, but the AI then spotted a LinkedIn update from the prospect suggesting the need was more urgent. Knowing how to prioritise the AI’s recommendations in a case like this, and learning from the situation, is critical.

If something doesn’t look right, we can pinpoint whether the issue is with the data itself or with how the AI processed it. This not only helps our engineers but also gives sales leaders and other users confidence in the AI’s decisions.
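With citations in place, a first-pass triage can be automated. The sketch below assumes each citation records when its data was last updated and uses a made-up rule: if any cited input is empty or older than a threshold (180 days here, an arbitrary assumption), suspect the data; otherwise suspect the interpretation. The usage mirrors the CRM-note-versus-LinkedIn example above with invented dates.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Citation:
    source: str
    record_id: str
    excerpt: str
    observed_at: datetime  # when the cited data was last updated


def triage(citations: list[Citation], now: datetime,
           max_age: timedelta = timedelta(days=180)) -> tuple[str, list[Citation]]:
    """Crude first pass: empty or old inputs point to a data problem;
    if every input looks sound, flag the AI's interpretation for review."""
    suspect = [c for c in citations if not c.excerpt.strip() or now - c.observed_at > max_age]
    if suspect:
        return "data", suspect
    return "interpretation", []


now = datetime(2025, 2, 1)
crm_note = Citation("crm", "note-17", "Prospect asked for a follow-up in six months", datetime(2024, 6, 1))
linkedin_update = Citation("linkedin", "post-3", "Prospect posted about an urgent vendor search", datetime(2025, 1, 20))

verdict, flagged = triage([crm_note, linkedin_update], now)  # the CRM note is 245 days old
verdict_fresh, _ = triage([linkedin_update], now)
```

A real system would need richer rules (for example, conflicting sources that are both fresh), but even a crude split like this tells an engineer where to start looking.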

Turning data into signals

One way we streamline data for AI is by converting raw inputs into what we call signals. Signals are high-level insights, such as "this prospect expressed interest in a competitor" or "this lead has a renewal coming up soon". Instead of making users sift through raw data, our system presents these pre-computed signals, which makes it easier for them to take action.
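To make the idea concrete, here is a minimal sketch that turns two kinds of raw input, an account record and call transcripts, into the two example signals named above. The 60-day renewal window, the competitor names and the keyword matching are all illustrative assumptions; a production system would likely use a model rather than keywords to detect competitor mentions.

```python
from dataclasses import dataclass
from datetime import date, timedelta

COMPETITORS = {"rivalco", "othercorp"}  # hypothetical competitor names
RENEWAL_WINDOW = timedelta(days=60)     # assumed meaning of "coming up soon"


@dataclass(frozen=True)
class Signal:
    kind: str
    detail: str


def compute_signals(account: dict, transcripts: list[str], today: date) -> list[Signal]:
    """Derive high-level signals from raw account data and call transcripts."""
    signals: list[Signal] = []
    end = account.get("contract_end")
    if end is not None and today <= end <= today + RENEWAL_WINDOW:
        signals.append(Signal("renewal_upcoming", f"Contract ends {end.isoformat()}"))
    for text in transcripts:
        words = {w.strip(".,!?").lower() for w in text.split()}  # naive tokenisation
        for name in sorted(COMPETITORS & words):
            signals.append(Signal("competitor_mention", f"Mentioned {name}"))
    return signals


signals = compute_signals(
    {"name": "Acme", "contract_end": date(2025, 3, 15)},
    ["We are also evaluating RivalCo next month."],
    today=date(2025, 2, 1),
)
```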

For example, when a sales rep is preparing to call a prospect, the assistant might highlight signals like recent job changes, prior engagement history, or competitive mentions – all compiled from multiple data sources. These signals are constantly updated in the background when any of the underlying data changes, ensuring they’re always fresh and relevant.
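Keeping signals fresh without recomputing everything usually means tracking which records each signal reads. The `SignalStore` below is a hypothetical, in-memory sketch of that dependency tracking: a change to one record recomputes only the signals that depend on it. It runs synchronously for clarity; in practice the change notification would more likely come from a webhook or change feed and be processed by a background worker.

```python
from collections import defaultdict
from typing import Any, Callable

Compute = Callable[[dict[str, Any]], Any]


class SignalStore:
    """Each signal declares the record ids it reads; changing a record
    recomputes only the signals that depend on it."""

    def __init__(self) -> None:
        self.records: dict[str, Any] = {}
        self.values: dict[str, Any] = {}
        self.refresh_log: list[str] = []  # which signals were recomputed, in order
        self._specs: dict[str, tuple[list[str], Compute]] = {}
        self._dependents: defaultdict[str, set[str]] = defaultdict(set)  # record id -> signal names

    def register(self, name: str, inputs: list[str], fn: Compute) -> None:
        self._specs[name] = (inputs, fn)
        for record_id in inputs:
            self._dependents[record_id].add(name)
        self._refresh(name)  # compute an initial value straight away

    def on_record_changed(self, record_id: str, value: Any) -> None:
        self.records[record_id] = value
        for name in sorted(self._dependents.get(record_id, set())):
            self._refresh(name)

    def _refresh(self, name: str) -> None:
        inputs, fn = self._specs[name]
        self.values[name] = fn({rid: self.records.get(rid) for rid in inputs})
        self.refresh_log.append(name)


store = SignalStore()
store.register("job_change", ["crm:contact-1"],
               lambda r: bool((r["crm:contact-1"] or {}).get("title_changed")))
store.register("recent_engagement", ["email:thread-9"],
               lambda r: r["email:thread-9"] is not None)
store.on_record_changed("crm:contact-1", {"title_changed": True})
```

Only `job_change` is recomputed after the CRM update; `recent_engagement` is left alone because none of its inputs changed.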

Mastering data challenges

AI assistants are only as good as the data they work with.

If the inputs are inconsistent or the outputs aren’t reliable, the entire system falls apart. By prioritising standardisation and measurement, we ensure our AI assistants provide sales teams with actionable insights they can trust.

At the end of the day, this is about making AI useful.

Whether it’s automating repetitive tasks or helping sales reps have better conversations, the combination of clean data and clear measurements makes all the difference. And as AI continues to evolve, these foundational practices will remain essential for any organisation looking to harness its full potential.