Confluent boosts Apache Flink with new developer tools

Data streaming platform and tools specialist Confluent used its flagship developer, data scientist, systems architect and administrator (other software engineering and data science positions are also available) conference ‘Current 2024’ to detail new capabilities that now feature in its Confluent Cloud fully managed cloud service for Apache Kafka.

With the volume and variety of real-time data increasing, the company says it has worked specifically to make stream processing and data streaming more accessible and secure.

Much of the tooling here is aligned with Apache Flink, a technology designed to support ‘stateful computations’ over data streams.

By way of additional clarification, in a stateful application a designated server logs and tracks the state of each entity in a process, session or user interaction. It retains information about that user (or machine) interaction and its past requests. Apache Flink is a framework and distributed processing engine for stateful computations over unbounded and bounded data streams. As noted by Secoda, a bounded stream has a defined start and end, so an entire data set can be ingested before any computation starts. An unbounded stream, by contrast, has a start but no end, so data must be processed continuously as it is generated.
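
To make the bounded/unbounded distinction concrete, here is a minimal plain-Python sketch. It uses only the standard library and is not Flink code. It keeps per-user state across events and runs unchanged over a bounded list and an unbounded generator. The event shape and the function names are hypothetical. Unlike Flink, the state here is just a local dictionary, with no checkpointing, partitioning or fault tolerance.

```python
from collections import defaultdict
from itertools import count, islice
from typing import Dict, Iterable, Iterator, Tuple

# Hypothetical event shape: (user_id, action).
Event = Tuple[str, str]


def running_counts(events: Iterable[Event]) -> Iterator[Tuple[str, int]]:
    """Emit each user's running event count as events arrive.

    The per-user counts are the operator's state: they persist between
    events, so each output depends on every earlier event for that user.
    """
    state: Dict[str, int] = defaultdict(int)
    for user_id, _action in events:
        state[user_id] += 1
        yield user_id, state[user_id]


# Bounded stream: a finite list with a defined start and end.
bounded = [("alice", "login"), ("bob", "login"), ("alice", "click")]
print(list(running_counts(bounded)))  # [('alice', 1), ('bob', 1), ('alice', 2)]


# Unbounded stream: a generator with a start but no end. Only a prefix can
# ever be consumed, and each event is processed as soon as it is generated.
def clickstream() -> Iterator[Event]:
    for i in count():
        yield ("alice" if i % 2 == 0 else "bob", "click")


for user, n in islice(running_counts(clickstream()), 4):
    print(user, n)
```

The same generator-based function handles both cases. This mirrors the idea that the processing logic does not need to know in advance whether its input will ever end.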

Confluent’s new support for the Table API makes Apache Flink available to Java and Python developers. The Table API is a unified, relational API for stream and batch processing, meaning Table API queries can be run on batch or streaming input without modification.
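
The idea of one query serving both batch and streaming input can be illustrated without Flink itself. The plain-Python sketch below is only an analogy and does not use the Table API. A hypothetical "count orders per product" query is defined once and then executed in two modes. In batch mode it emits final rows. In streaming mode it emits a changelog, using the +I (insert), -U (update-before) and +U (update-after) row kinds that Flink uses for continuously updating results. The names `by_product`, `run_batch`, `run_streaming` and `materialize` are illustrative only.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

# Hypothetical order record: (product, quantity).
Order = Tuple[str, int]


def by_product(order: Order) -> str:
    """The 'query', defined once: GROUP BY product, COUNT(*)."""
    return order[0]


def run_batch(key_fn: Callable[[Order], str], rows: Iterable[Order]) -> List[Tuple[str, int]]:
    """Batch execution: consume the whole bounded input, then emit final rows."""
    counts = Counter(key_fn(row) for row in rows)
    return sorted(counts.items())


def run_streaming(key_fn: Callable[[Order], str], rows: Iterable[Order]) -> Iterator[Tuple[str, str, int]]:
    """Streaming execution of the same query: emit a changelog as rows arrive.

    '+I' inserts a new result row; '-U' retracts the previous value and
    '+U' emits the updated one.
    """
    counts: Counter = Counter()
    for row in rows:
        key = key_fn(row)
        old = counts[key]  # Counter returns 0 for unseen keys
        counts[key] = old + 1
        if old == 0:
            yield ("+I", key, 1)
        else:
            yield ("-U", key, old)
            yield ("+U", key, old + 1)


def materialize(changelog: Iterable[Tuple[str, str, int]]) -> List[Tuple[str, int]]:
    """Apply a changelog to an empty table to obtain the current result."""
    table: Dict[str, int] = {}
    for kind, key, value in changelog:
        if kind in ("+I", "+U"):
            table[key] = value
        elif kind == "-U":
            table.pop(key, None)
    return sorted(table.items())


orders = [("apple", 2), ("pear", 1), ("apple", 5)]
print(run_batch(by_product, orders))
print(list(run_streaming(by_product, orders)))
```

Replaying the streaming changelog through `materialize` yields the same rows as the batch run. That is the sense in which the query keeps the same semantics on either kind of input.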

Also here we can see that Confluent’s private networking for Flink provides enterprise-level protection for use cases with sensitive data; Confluent Extension for Visual Studio Code accelerates the development of real-time use cases; and Client-Side Field Level Encryption encrypts sensitive data for stronger security and privacy.

Instant app analysis & response

According to Stewart Bond, research vice president at IDC, the true strength of Apache Flink for stream processing is that it empowers developers to create applications that instantly analyse and respond to real-time data, significantly enhancing responsiveness and user experience.

“Managed Apache Flink solutions can eliminate the complexities of infrastructure management while saving time and resources. Businesses must look for a Flink solution that seamlessly integrates with the tools, programming languages and data formats they’re already using for easy implementation into business workflows,” said Bond.

One wonders which ‘seamlessly integrated Apache Flink solution’ Bond thinks businesses should look at first, right? Confluent has been named a leader in the IDC MarketScape: Worldwide Analytic Stream Processing Software 2024 Vendor Assessment, so it’s not too hard to work it out.

Why is stream processing mushrooming?

More businesses are relying on stream processing to build real-time applications and pipelines for use cases spanning machine learning, predictive maintenance, personalised recommendations and fraud detection. In real-world deployments, stream processing generally enables organisations to ‘blend and enrich’ their data with information from across their business.
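
Here is a minimal sketch of what ‘blend and enrich’ can mean in practice. It assumes a stream of transaction events and a small customer reference table, both hypothetical. As each event passes through, it is joined with the matching customer record, and the enriched fields then feed a simple fraud-style rule. This is plain Python with illustrative field names. It stands in for the kind of lookup join a stream processor such as Flink would perform at scale and is not Flink or Confluent code.

```python
from typing import Dict, Iterable, Iterator, Optional

# Hypothetical reference data held elsewhere in the business (e.g. a CRM).
CUSTOMERS: Dict[str, Dict[str, str]] = {
    "c1": {"name": "Acme Ltd", "country": "GB"},
    "c2": {"name": "Globex", "country": "US"},
}


def enrich(transactions: Iterable[dict], customers: Dict[str, Dict[str, str]]) -> Iterator[dict]:
    """Blend each transaction with its customer record as it arrives.

    Works like a left lookup join: transactions for unknown customers pass
    through with None in the joined fields instead of being dropped.
    """
    for tx in transactions:
        customer = customers.get(tx["customer_id"], {})
        yield {
            **tx,
            "customer_name": customer.get("name"),
            "home_country": customer.get("country"),
        }


def spent_abroad(row: dict) -> bool:
    """Example rule that needs enriched data: spending outside the home country."""
    home: Optional[str] = row["home_country"]
    return home is not None and row["country"] != home


stream = [
    {"tx_id": 1, "customer_id": "c1", "amount": 40.0, "country": "GB"},
    {"tx_id": 2, "customer_id": "c2", "amount": 900.0, "country": "FR"},
    {"tx_id": 3, "customer_id": "c9", "amount": 10.0, "country": "US"},
]
for row in enrich(stream, CUSTOMERS):
    print(row["tx_id"], row["customer_name"], spent_abroad(row))
```

The rule in `spent_abroad` could not be evaluated on the raw transaction alone. That dependency on joined-in context is what makes enrichment useful for cases such as fraud detection.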

Shaun Clowes, chief product officer at Confluent, reminds us that Apache Flink is the de facto standard for stream processing. However, he says, many teams hit roadblocks with Flink because it’s operationally complex, difficult to secure and carries expensive infrastructure and management costs.

“Thousands of teams worldwide use Apache Flink as their trusted stream processing solution to deliver exceptional customer experiences and streamline operations by shifting processing closer to the source, where data is fresh and clean,” said Clowes. “Our latest innovations push the boundaries further, making it easier for developers of all skill levels to harness this powerful technology for even more mission-critical and complex use cases.”

Looking deeper into the product news on offer here, Confluent Cloud for Apache Flink offers the SQL API, a user-friendly tool for processing data streams. While Flink SQL is effective for quickly writing and executing queries, some teams favour programming languages such as Java or Python, which give them more control over their applications and data. This can be especially important when developing complex business logic or custom processing tasks.

Confluent serves up Table API

The company says that adding support for the Table API to Confluent Cloud for Apache Flink enables Java or Python developers to easily create streaming applications using familiar tools. By supporting both Flink SQL and the Table API, Confluent Cloud for Apache Flink lets developers choose the best language for their use cases.

Support for the Table API gives software and data teams more language flexibility. Developers can use their preferred programming languages and take advantage of language-specific features and custom operations. It also streamlines coding, because teams can work in their Integrated Development Environment (IDE) of choice, with auto-completion, refactoring tools and compile-time checks that help ensure higher code quality and minimise runtime issues. Debugging is made easier with an iterative approach to data processing and streamlined CI/CD integration.
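
Writing stream-processing logic in a general-purpose language has a practical consequence: the business rules can be unit-tested and type-checked like any other code. The sketch below is plain Python and deliberately does not call Flink or Confluent’s Table API. The `Payment` type, the `is_suspicious` rule and its 1,000 limit are all hypothetical. In Python, the nearest equivalent to compile-time checks is running a static type checker such as mypy over the type hints. A CI job could run the tests with the built-in runner, for example `python -m unittest test_rules`, assuming the code is saved as `test_rules.py`.

```python
import unittest
from dataclasses import dataclass


@dataclass(frozen=True)
class Payment:
    """A hypothetical payment event; field names are illustrative."""
    account_id: str
    amount: float
    country: str


def is_suspicious(payment: Payment, home_country: str, limit: float = 1_000.0) -> bool:
    """Hypothetical custom rule: a large payment made outside the home country."""
    return payment.amount > limit and payment.country != home_country


class IsSuspiciousTest(unittest.TestCase):
    def test_large_foreign_payment_is_flagged(self) -> None:
        self.assertTrue(is_suspicious(Payment("a1", 5_000.0, "FR"), home_country="GB"))

    def test_large_domestic_payment_is_not_flagged(self) -> None:
        self.assertFalse(is_suspicious(Payment("a1", 5_000.0, "GB"), home_country="GB"))

    def test_small_foreign_payment_is_not_flagged(self) -> None:
        self.assertFalse(is_suspicious(Payment("a1", 20.0, "FR"), home_country="GB"))
```

Keeping the rule as a pure function, separate from any pipeline wiring, is what makes this kind of fast, isolated testing possible.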

Table API support is available in open preview for testing and experimentation purposes. General availability is coming soon.

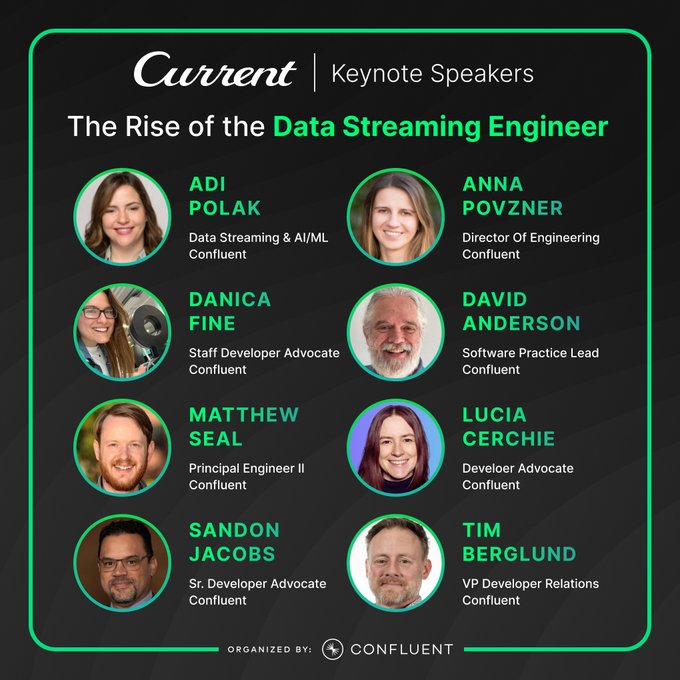

Confluent made these announcements at its annual user conference, Current 2024, held this year in Austin, Texas.