CI/CD series - Tibco: Continuous self-service intelligence with Kafka DataOps

This is a guest post for the Computer Weekly Developer Network in our Continuous Integration (CI) & Continuous Delivery (CD) series.

This contribution is written by Mark Palmer in his capacity as SVP of data, analytics and data science at TIBCO Software & Steven Warwick in his capacity as principal software engineer at TIBCO Software — the company is a specialist in integration, API management and analytics.

Palmer & Warwick write…

Developers love Kafka because it makes it easy to integrate software systems with real-time data. But to business users, Kafka can seem like a cable TV channel you haven’t subscribed to: the insights are buried within Kafka messages that business users can’t easily access.

Recently, developments in analytics and Kafka DataOps have, for the first time, made Kafka a first-class citizen for Business Intelligence (BI) and Artificial Intelligence (AI). Better yet, connecting Kafka to these tools can now take just a few minutes. Better still, the insights are real time, so the information gathered is well suited to CI/CD implementations.

Here’s how DataOps for Kafka works and how continuous intelligence with Kafka can change how business users think about data.

Streaming Business Intelligence

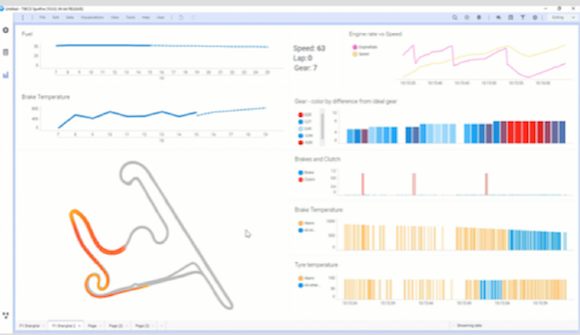

Streaming BI is a new class of technology that connects Kafka streaming data to self-service business intelligence. For the first time, visual analytics can cure business analysts’ blindness to Kafka data. Here’s how it works. Streaming BI supports native connectivity for Kafka topics. It takes just a few minutes to set up: you open a Kafka topic as you would open an Excel spreadsheet. Once connected, a Kafka topic looks like any other database table, except that it’s live and continuously updated in the BI tool. The business analyst selects the topics they want to explore, creates visualisations and links views.
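
To make the idea of a topic that "looks like a table" more concrete, here is a minimal Python sketch. It materialises keyed messages into a table that holds the latest value per key, and it treats a `None` value as a deletion, much like a tombstone on a compacted Kafka topic. It does not use a real Kafka client. `LiveTable` and the message shape are hypothetical stand-ins, and a real streaming BI engine does far more than this.

```python
from typing import Any, Callable, Dict, List, Optional

Row = Dict[str, Any]
Listener = Callable[[str, Optional[Row]], None]


class LiveTable:
    """Hypothetical 'topic as a table' view: latest value per message key."""

    def __init__(self) -> None:
        self.rows: Dict[str, Row] = {}
        self.listeners: List[Listener] = []

    def subscribe(self, listener: Listener) -> None:
        # A BI visualisation registers here to hear about every row change.
        self.listeners.append(listener)

    def apply(self, key: str, value: Optional[Row]) -> None:
        # Upsert semantics: the newest message for a key replaces the old row.
        # A None value acts like a tombstone and deletes the row.
        if value is None:
            self.rows.pop(key, None)
        else:
            self.rows[key] = value
        # Push the change so the view updates without re-querying.
        for listener in self.listeners:
            listener(key, value)


if __name__ == "__main__":
    table = LiveTable()
    table.subscribe(lambda key, value: print("changed:", key, value))
    # Simulated messages from a hypothetical "orders" topic.
    table.apply("order-1", {"status": "placed", "amount": 120.0})
    table.apply("order-2", {"status": "placed", "amount": 80.0})
    table.apply("order-1", {"status": "shipped", "amount": 120.0})
    table.apply("order-2", None)
    print(table.rows)  # {'order-1': {'status': 'shipped', 'amount': 120.0}}
```

The listener callback stands in for the "continuously updated" part. The view is told about each change as it happens rather than polling the table.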

Each visualisation creates queries that are submitted to the streaming BI engine to continuously evaluate messages on Kafka as conditions change. When any element in the result set changes, the incremental change is pushed to the BI tool.
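
Push-based, incremental evaluation can be illustrated with a toy continuous query: a running `SUM(amount) GROUP BY region`. On each new message it updates only the affected group, and it pushes a change record only when that group's result actually changes. This is a hypothetical Python sketch of the general idea, not TIBCO's engine. The field names `region` and `amount` are assumptions.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

# (group key, previous result or None if the group is new, new result)
Delta = Tuple[str, Optional[float], float]


class ContinuousGroupSum:
    """Hypothetical continuous query: SUM(amount) GROUP BY region."""

    def __init__(self, push: Callable[[Delta], None]) -> None:
        self.totals: Dict[str, float] = {}
        self.push = push  # receives incremental changes, not the full result set

    def on_message(self, message: Dict[str, Any]) -> None:
        region = str(message["region"])
        previous = self.totals.get(region)
        updated = (previous or 0.0) + float(message["amount"])
        self.totals[region] = updated
        # Only the affected group is recomputed, and only a real change is pushed.
        if previous is None or updated != previous:
            self.push((region, previous, updated))


if __name__ == "__main__":
    pushed: List[Delta] = []
    query = ContinuousGroupSum(pushed.append)
    for msg in [
        {"region": "EMEA", "amount": 100},
        {"region": "APAC", "amount": 50},
        {"region": "EMEA", "amount": 0},  # result unchanged: nothing is pushed
        {"region": "EMEA", "amount": 25},
    ]:
        query.on_message(msg)
    print(pushed)  # [('EMEA', None, 100.0), ('APAC', None, 50.0), ('EMEA', 100.0, 125.0)]
```

Sending deltas instead of re-running the whole query is what keeps the BI tool's traffic proportional to how much the answer changes, not to how many messages arrive.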

Streaming BI looks like traditional BI, but all the data is alive! Graphs update in real time. Alerts fire. Continuous insight on Kafka data is now at the fingertips of any business analyst, with no programming, no KSQL hacking and no weeks or months of development from IT.

Because streaming BI is built on an advanced streaming analytics engine, analysts can create arbitrary time-based sliding windows, join and merge streams, or even apply machine learning models in real time.
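
A time-based sliding window, the first of those operations, can be sketched in a few lines. The idea is to keep the events from the last `window_seconds` and evict older ones as new events arrive. The Python below is a hypothetical illustration that assumes events arrive in timestamp order. Real engines also have to cope with late and out-of-order data.

```python
from collections import deque
from typing import Deque, Tuple


class SlidingWindowAverage:
    """Average of the values seen in the last `window_seconds` (a time-based window)."""

    def __init__(self, window_seconds: float) -> None:
        if window_seconds <= 0:
            raise ValueError("window_seconds must be positive")
        self.window_seconds = window_seconds
        self.events: Deque[Tuple[float, float]] = deque()  # (timestamp, value)
        self.total = 0.0

    def add(self, timestamp: float, value: float) -> float:
        # Assumes in-order arrival: timestamps never go backwards.
        self.events.append((timestamp, value))
        self.total += value
        # Evict events that slid out: keep only (timestamp - window, timestamp].
        cutoff = timestamp - self.window_seconds
        while self.events and self.events[0][0] <= cutoff:
            _, old_value = self.events.popleft()
            self.total -= old_value
        return self.total / len(self.events)


if __name__ == "__main__":
    window = SlidingWindowAverage(window_seconds=10.0)
    for ts, price in [(0.0, 10.0), (5.0, 20.0), (12.0, 30.0), (15.0, 40.0)]:
        print(ts, window.add(ts, price))
    # prints: 0.0 10.0, then 5.0 15.0, 12.0 25.0, 15.0 35.0
```

Keeping a running total means each update costs time proportional to the number of evicted events rather than to the window size.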

Adaptive data science & Kafka

This same streaming technology can power adaptive data science too.

So what is adaptive data science? Traditional machine learning trains models on historical data. This predictive intelligence works well for applications where the world essentially stays the same, that is, where the patterns, anomalies and mechanisms observed in the past will recur in the future. In that sense, traditional predictive analytics really looks to the past rather than the future.

But in many real-world situations, conditions do change. With sensors becoming ubiquitous, Kafka acts like an enterprise nervous system, whose ‘sensory input’ can be used to score data science models continuously. When you inject an AI model into the streaming BI server, its predictions update continually as conditions change. Java, PMML, R, H2O, TensorFlow and Python models can all be executed against sliding windows of Kafka messages.
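
The sketch below gives a rough idea of scoring a model against a sliding window of messages. It keeps the last `size` sensor readings, derives two simple features (mean and maximum) and feeds them to a logistic model. The coefficients are invented for the example. They stand in for a trained model exported from one of the frameworks above, and nothing here reflects a specific product API.

```python
import math
from collections import deque
from typing import Deque


class WindowedModelScorer:
    """Re-scores a model on a count-based sliding window each time a reading arrives."""

    # Hypothetical coefficients standing in for a trained, exported model.
    BIAS = -8.0
    W_MEAN = 0.05
    W_MAX = 0.03

    def __init__(self, size: int) -> None:
        # deque(maxlen=...) silently drops the oldest reading once full.
        self.window: Deque[float] = deque(maxlen=size)

    def score(self, reading: float) -> float:
        self.window.append(reading)
        # Features are computed over the current window only.
        mean = sum(self.window) / len(self.window)
        peak = max(self.window)
        z = self.BIAS + self.W_MEAN * mean + self.W_MAX * peak
        return 1.0 / (1.0 + math.exp(-z))  # logistic: probability in (0, 1)


if __name__ == "__main__":
    scorer = WindowedModelScorer(size=5)
    for temperature in [60.0, 62.0, 61.0, 90.0, 110.0, 120.0]:
        # The prediction is refreshed with every message, tracking changing conditions.
        print(temperature, round(scorer.score(temperature), 3))
```

For example, a first reading of 100 gives z = -8 + 5 + 3 = 0, and therefore a score of 0.5. As hotter readings enter the window, the score rises without the model being retrained.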

Continuous Intelligence

Combined, streaming BI and AI provide continuous intelligence for Kafka. This style of analytics flips the traditional, backward-looking model of processing on its head: in effect, business analysts can now query and predict the future. It brings BI, for the first time, to true real-time use cases in operational business applications that use Kafka.

Here’s how to think about using Kafka for continuous intelligence. First, imagine the data you might have on Kafka that changes frequently: sales leads, transactions, connected vehicles, mobile apps, wearable devices, social media updates, customer calls, robotic device state changes, kiosk interactions, social media activity, website hits, customer orders, chat messages, supply chain updates and file exchanges.

Next, think of questions about the topics on your Kafka streams that start with ‘tell me when’. These questions can contain mathematics, rules, or a machine learning model and can be answered millions or billions of times a day. When answered, your BI tool will call you; you don’t have to sit around and wait.

These are questions about the future: Tell me when a high-value customer walks into my store. Tell me when a piece of equipment shows signs of failure for more than 30 seconds. Tell me when a plane is about to land with a high-priority passenger aboard at risk of missing their connection.
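
The equipment question ("tell me when ... for more than 30 seconds") is a condition with a duration attached. The sketch rests on several assumptions. Each message carries a device ID, a timestamp in seconds and a vibration reading. A reading above a hypothetical threshold of 7.0 counts as a "sign of failure". With those in place, one way to implement the question is to remember when the condition first became true for each device and fire a single alert once it has held for longer than 30 seconds.

```python
from typing import Dict, List, Optional, Tuple

VIBRATION_THRESHOLD = 7.0  # hypothetical "sign of failure" level
HOLD_SECONDS = 30.0        # "for more than 30 seconds"

Alert = Tuple[str, float]  # (device id, time the alert fired)


class TellMeWhen:
    """Alerts once per episode when a device stays above threshold for > HOLD_SECONDS."""

    def __init__(self) -> None:
        self.since: Dict[str, float] = {}  # device -> when the condition became true
        self.fired: Dict[str, bool] = {}   # device -> already alerted this episode?
        self.alerts: List[Alert] = []

    def on_reading(self, device: str, ts: float, vibration: float) -> Optional[Alert]:
        if vibration <= VIBRATION_THRESHOLD:
            # Condition broken: forget this episode so the clock restarts next time.
            self.since.pop(device, None)
            self.fired.pop(device, None)
            return None
        start = self.since.setdefault(device, ts)
        if ts - start > HOLD_SECONDS and not self.fired.get(device, False):
            self.fired[device] = True
            alert = (device, ts)
            self.alerts.append(alert)  # in a BI tool, this is where you get "called"
            return alert
        return None


if __name__ == "__main__":
    monitor = TellMeWhen()
    readings = [
        ("pump-7", 0.0, 8.1),
        ("pump-7", 20.0, 8.4),
        ("pump-7", 31.0, 8.2),  # above threshold for 31 s: alert fires
        ("pump-7", 40.0, 8.9),  # still high, but already alerted
        ("pump-7", 45.0, 3.0),  # back to normal: episode resets
    ]
    for device, ts, vibration in readings:
        monitor.on_reading(device, ts, vibration)
    print(monitor.alerts)  # [('pump-7', 31.0)]
```

This sketch evaluates the condition only when a message arrives. A production engine would typically also use timers, so the alert fires even if the device goes quiet after crossing the threshold.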

Most companies don’t bother asking ‘tell me when’ questions because, well, their BI and AI tools couldn’t answer them.

Until now.

Continuous Kafka

Continuous Intelligence with Kafka enables entirely new use cases and applications that leverage real-time data.

Bank risk officers can detect anomalies within streams of Kafka messages carrying trades, orders, market data, client activity, account activity and risk metrics, identifying suspicious trading activity and trading opportunities and assessing compliance in real time.
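
One simple way to flag anomalies in a stream, such as the notional value of each trade, is a rolling z-score. Each new value is compared with the mean and standard deviation of the previous `size` values and flagged when it lies more than `threshold` standard deviations away. This Python sketch uses that basic statistical technique as a stand-in. The window size and threshold are hypothetical, and production surveillance systems use far richer models.

```python
import statistics
from collections import deque
from typing import Deque


class RollingZScore:
    """Flags a value lying more than `threshold` std devs from the recent mean."""

    def __init__(self, size: int = 20, threshold: float = 3.0, min_history: int = 5) -> None:
        self.history: Deque[float] = deque(maxlen=size)  # the last `size` values
        self.threshold = threshold
        self.min_history = min_history  # don't judge until there is some history

    def is_anomaly(self, value: float) -> bool:
        anomalous = False
        if len(self.history) >= self.min_history:
            past = list(self.history)
            mean = statistics.fmean(past)
            stdev = statistics.pstdev(past)
            # Skip a perfectly flat history to avoid dividing by zero.
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                anomalous = True
        # Score against past values first, then let this value join the history.
        self.history.append(value)
        return anomalous


if __name__ == "__main__":
    detector = RollingZScore()
    trade_notionals = [100.0, 102.0, 98.0, 101.0, 99.0, 100.0, 500.0, 101.0]
    print([detector.is_anomaly(v) for v in trade_notionals])
    # [False, False, False, False, False, False, True, False]
```

In this sketch a flagged value still enters the history and widens the spread for later messages. A real system might exclude confirmed anomalies from the baseline.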

Supply chain, logistics and transportation firms can analyse streaming data on Kafka to monitor and map connected vehicles, containers and people in real time. Streaming BI helps analysts optimise deliveries by identifying the most impactful routing problems.

Smart city operations analysts can monitor GPS, traffic, bus and train data on Kafka to predict and act on dangerous conditions before they cause harm.

Energy companies can analyse data from sensor-enabled industrial equipment to avoid oil production problems before they happen and to predict when to perform maintenance. ConocoPhillips says that these systems could lead to “billions and billions of dollars” in savings.

Why not use KSQL?

KSQL allows developers to query Kafka data, but it isn’t real-time and continuous, BI tools don’t support it, and it requires developers to build a system around it. For example, BI tools like Power BI, Tableau and Looker don’t support it because they aren’t built for streaming BI: they don’t accept continuous, push-based, incremental query results.

So using KSQL for analytics is like buying a hammer and expecting to get a house as a result – there’s a lot more to it.

Streaming BI instantly makes Kafka useful by allowing users to connect to professional BI tools and data science models without KSQL coding. No need to build a house – you just move in! Connect Kafka to your BI tool with the click of a button and prepare to experience a continuously live, immersive BI experience.

The other approach is to simply stuff Kafka messages into a database and use SQL. This works if all you want to do is to look in the rear-view mirror at what has already happened.

Fusing Continuous Intelligence & History

There’s just one more thing.

For the first time, streaming BI helps you combine real-time insight with historical insight, which lets analysts compare real-time conditions with what has already happened. This is analytics nirvana.

For example, an airline operations team might use Kafka to capture data about frequent flyers as they check in for flights. With streaming BI, analysts can correlate those live check-ins with each customer’s history as they decide how to act in real time.
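
Fusing live events with history often comes down to a stream-table join: each incoming event is enriched with a lookup against historical data and then compared with it. In this hypothetical Python sketch, check-in events are joined with a per-customer history record. All of the following are invented for illustration: the loyalty tier, the count of past disruptions, the 45-minute "tight connection" rule and the decision logic.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class CustomerHistory:
    tier: str                   # hypothetical loyalty tier, e.g. "gold"
    disruptions_last_year: int  # drawn from historical records


@dataclass
class CheckIn:
    customer_id: str
    flight: str
    connection_minutes: Optional[int]  # None when there is no onward flight


TIGHT_CONNECTION_MINUTES = 45  # hypothetical rule of thumb


def needs_attention(event: CheckIn, history: Dict[str, CustomerHistory]) -> bool:
    """Enrich one live check-in with history (a stream-table join) and decide."""
    past = history.get(event.customer_id)
    if past is None or event.connection_minutes is None:
        return False  # nothing to compare against, or no connection at risk
    tight = event.connection_minutes < TIGHT_CONNECTION_MINUTES
    return tight and past.tier == "gold" and past.disruptions_last_year > 0


def flag_check_ins(events: List[CheckIn], history: Dict[str, CustomerHistory]) -> List[str]:
    return [e.customer_id for e in events if needs_attention(e, history)]


if __name__ == "__main__":
    history = {
        "C1": CustomerHistory("gold", 2),
        "C2": CustomerHistory("silver", 0),
        "C3": CustomerHistory("gold", 0),
    }
    live = [
        CheckIn("C1", "XY100", 35),
        CheckIn("C2", "XY100", 30),
        CheckIn("C3", "XY200", 40),
        CheckIn("C4", "XY200", 20),  # no history on file
    ]
    print(flag_check_ins(live, history))  # ['C1']
```

Here the history is an in-memory dictionary for simplicity. In practice it would more likely be a database or a table materialised from another topic.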

Streaming BI and adaptive data science can turn how you think about using Kafka on its head. With continuous intelligence, business users can now have self-service access to Kafka events, apply AI in real-time and understand what’s happening right now. Even better, they can query the future and make more accurate and timely predictions and decisions.