Remember what it felt like when you got your first solid state drive (SSD)? Back in 2008, laptops with a hard disk drive (HDD) felt unresponsive – they lagged and nearly ground to a halt when the virus scanner started accessing the HDD. Then Intel launched the X25-M SSD and laptops felt much more responsive. Gone was the lag. Nobody noticed when the virus scanner ran.

Those old systems suffered from a gap in the memory and storage hierarchy. The difference in latency between DRAM and the HDD was humongous: about 80 ns (nanoseconds) for DRAM versus 3 ms (milliseconds) for the HDD, so the CPU wasted cycles waiting for data. Then along came the NAND SSD, with a latency of around 50 microseconds, to save the day. The gap in the hierarchy was filled, and users felt it.
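
For a sense of scale, here is the arithmetic on the latencies quoted above as a small Python sketch. The constant and function names are ours; the figures are the approximate values from the text.

```python
# Approximate latencies quoted in the text, in nanoseconds.
DRAM_NS = 80                # DRAM: ~80 ns
HDD_NS = 3_000_000          # hard disk drive: ~3 ms
NAND_SSD_NS = 50_000        # first NAND SSDs: ~50 microseconds


def latency_ratio(slower_ns: float, faster_ns: float) -> float:
    """How many times longer an access to the slower layer takes."""
    return slower_ns / faster_ns


if __name__ == "__main__":
    print(f"HDD vs DRAM:      {latency_ratio(HDD_NS, DRAM_NS):>8,.0f}x")
    print(f"NAND SSD vs DRAM: {latency_ratio(NAND_SSD_NS, DRAM_NS):>8,.0f}x")
    print(f"HDD vs NAND SSD:  {latency_ratio(HDD_NS, NAND_SSD_NS):>8,.0f}x")
```

An HDD access took about 37,500 times as long as a DRAM access. The SSD cut the step below DRAM to about 625 times, while sitting 60 times closer to the CPU than the HDD.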

Fast forward to today, and we once again need a new technology in the hierarchy. NAND SSDs are great, but their latency is actually slightly longer than when they first arrived, a compromise that lets them hold more data. Meanwhile, CPU performance has kept increasing. So data on a NAND SSD is further away from the CPU than it used to be. This time, the effect is felt most acutely in the datacentre, where data set sizes are growing at an astounding rate, doubling every three years.

Filling the gaps

Intel® Optane™ and 3D NAND technology are filling these new gaps to make the memory and storage hierarchy complete. But we still see performance and capacity gaps emerging as time passes.

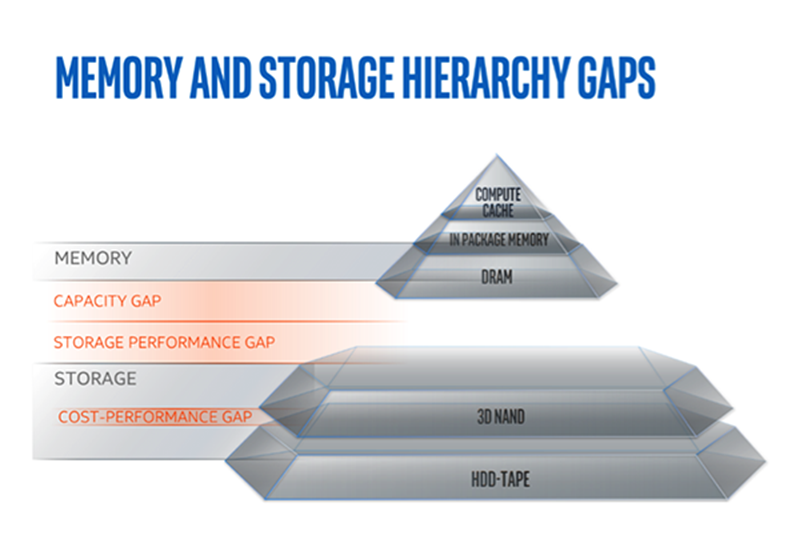

Think of the memory and storage hierarchy: the CPU sits at the top, with DRAM below it, then NAND, then HDD, and tape at the bottom. As you move from bottom to top, each layer of the hierarchy holds progressively “hotter” data, making that data more rapidly accessible to the processor. Following the 90/10 rule (10% of the data is accessed 90% of the time), we would expect each layer to have 10 times the capacity of the layer above it, but only one-tenth the performance. The system moves more frequently accessed data up the hierarchy, closer to the CPU, and moves less frequently accessed data down.
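
A toy model of the placement described above, written as a Python sketch. The helpers `place_by_heat` and `access_share` are hypothetical: they rank items by access count and fill tiers starting nearest the CPU, with each tier ten times the capacity of the one above. Real systems migrate cache lines, pages, or blocks incrementally rather than re-sorting everything at once, so this only illustrates the ordering.

```python
from __future__ import annotations


def place_by_heat(access_counts: dict[str, int], capacities: list[int]) -> list[list[str]]:
    """Assign items to tiers, hottest first. Tier 0 is the one closest to the CPU."""
    if sum(capacities) < len(access_counts):
        raise ValueError("the hierarchy is too small to hold every item")
    # Stable sort: items with equal counts keep their original order.
    hottest_first = sorted(access_counts, key=access_counts.get, reverse=True)
    tiers, start = [], 0
    for capacity in capacities:
        tiers.append(hottest_first[start:start + capacity])
        start += capacity
    return tiers


def access_share(tiers: list[list[str]], access_counts: dict[str, int]) -> list[float]:
    """Fraction of all accesses served by each tier."""
    total = sum(access_counts.values())
    return [sum(access_counts[item] for item in tier) / total for tier in tiers]


if __name__ == "__main__":
    # Hypothetical workload: 111 blocks with a skewed access pattern.
    counts = {f"blk{i}": 1000 // (i + 1) for i in range(111)}
    tiers = place_by_heat(counts, capacities=[1, 10, 100])  # each layer 10x the one above
    for name, tier, share in zip(["DRAM", "NAND", "HDD"], tiers, access_share(tiers, counts)):
        print(f"{name}: {len(tier):>3} blocks, {share:.0%} of accesses")
```

The more skewed the access counts, the larger the share of accesses the small top tier absorbs, which is the effect the 90/10 rule relies on.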

So why do gaps keep emerging? They result from a mismatch between the growing need to compute on ever-larger amounts of data and the trends in the underlying memory technologies. Remember that data is doubling every three years, an incredible pace. But DRAM capacity per die is doubling only every four years. This mismatch means we can’t keep as much data as we would like in DRAM, which is close to the processor in terms of latency. The result is a gap just below DRAM: a memory capacity gap.
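
The two doubling periods above compound into a steadily widening gap. This Python sketch works it through; the function names are ours, and it assumes the number of DRAM dies per system stays fixed, so that DRAM capacity tracks capacity per die.

```python
DATA_DOUBLING_YEARS = 3.0   # data set sizes double every ~3 years (from the text)
DRAM_DOUBLING_YEARS = 4.0   # DRAM capacity per die doubles every ~4 years (from the text)


def growth(years: float, doubling_years: float) -> float:
    """Growth factor after `years` for a quantity that doubles every `doubling_years`."""
    return 2 ** (years / doubling_years)


def dram_coverage(years: float) -> float:
    """Share of the data that fits in DRAM after `years`, relative to today (today = 1.0).

    Assumes a fixed number of DRAM dies per system, so DRAM capacity
    grows only as fast as capacity per die.
    """
    return growth(years, DRAM_DOUBLING_YEARS) / growth(years, DATA_DOUBLING_YEARS)


if __name__ == "__main__":
    print(f"data grows {growth(1, DATA_DOUBLING_YEARS) - 1:.0%} per year")
    print(f"DRAM grows {growth(1, DRAM_DOUBLING_YEARS) - 1:.0%} per year")
    for years in (3, 6, 12):
        print(f"after {years:>2} years, DRAM holds {dram_coverage(years):.0%} of today's share")
```

Data grows about 26% a year against about 19% for DRAM per die, so the share of data that fits in DRAM halves every 12 years.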

Moreover, while NAND is increasing its capacity at a fast-enough rate (doubling every two years), its latency has stayed relatively constant over time. So as the CPU gets faster, data on NAND SSDs appears further away. This opens a second gap, between DRAM and NAND SSDs: a storage performance gap.
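
One way to see why a fixed latency "appears further away" is to count the work a core could have done while it waits. In this Python sketch, the NAND latency is the ~50 microsecond figure from earlier in the text; the per-core throughput values are hypothetical, chosen only to show what happens when the core gets twice as fast.

```python
NAND_READ_NS = 50_000  # NAND SSD read latency, roughly constant over time (~50 microseconds)


def stall_work(latency_ns: float, ops_per_ns: float) -> float:
    """Operations a core could have completed while waiting on one access."""
    return latency_ns * ops_per_ns


if __name__ == "__main__":
    # Hypothetical core throughputs: a baseline core and one that is twice as fast.
    for label, ops_per_ns in (("baseline core", 3.0), ("2x faster core", 6.0)):
        lost = stall_work(NAND_READ_NS, ops_per_ns)
        print(f"{label}: {lost:,.0f} operations lost per NAND read")
```

The latency has not changed, but the faster core forgoes twice as much work on every read, so the NAND layer is effectively twice as far away.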

To further complicate matters, memory technologies tend to grow in capacity faster than they grow in throughput. As a result, bandwidth per unit of capacity falls over time, increasing the time needed to access big data sets held at any given layer of the hierarchy. This reinforces the gaps.
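
The time to read an entire layer once is simply capacity divided by bandwidth, so when capacity outpaces bandwidth that time keeps growing. This Python sketch uses a hypothetical device (4,000 GB at 2 GB/s) and hypothetical doubling periods (capacity every 2 years, bandwidth every 4); the text only says that capacity grows faster than throughput.

```python
def full_scan_seconds(capacity_gb: float, bandwidth_gb_s: float) -> float:
    """Time to read an entire layer once: capacity divided by bandwidth."""
    return capacity_gb / bandwidth_gb_s


def scan_time_after(years: float, capacity_gb: float, bandwidth_gb_s: float,
                    capacity_doubling_years: float, bandwidth_doubling_years: float) -> float:
    """Full-scan time after `years`, when capacity and bandwidth grow at different rates."""
    capacity = capacity_gb * 2 ** (years / capacity_doubling_years)
    bandwidth = bandwidth_gb_s * 2 ** (years / bandwidth_doubling_years)
    return full_scan_seconds(capacity, bandwidth)


if __name__ == "__main__":
    # Hypothetical device and growth rates, for illustration only.
    for years in (0, 4, 8):
        seconds = scan_time_after(years, 4_000, 2, 2, 4)
        print(f"year {years}: {seconds:,.0f} s to read the whole device")
```

Under these assumptions, the time to read the whole device doubles every four years, from 2,000 s to 8,000 s over eight years.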

Bringing more storage closer to the CPU

Filling these two gaps requires a new technology, one with higher capacity and lower cost than DRAM, though it does not need DRAM levels of performance (latency and throughput). Because this new memory must also serve as storage, it must be persistent across power cycles. That technology is Intel® 3D XPoint™ media, deployed in both Intel® Optane™ SSDs and Intel® Optane™ DC Persistent Memory.

Intel® Optane™ SSDs have latencies of about 10 microseconds, roughly one-tenth that of a NAND SSD. Better yet, they return data quickly far more consistently than NAND SSDs do. This means Intel® Optane™ SSDs fill part of the storage performance gap, bringing more storage closer to the CPU.

Intel® Optane™ DC Persistent Memory can be accessed directly with CPU load and store instructions, without operating system intervention, and at the granularity of a single cache line. Access latency is in the hundreds of nanoseconds: about 100 ns to 340 ns, depending on whether the access hits in the DRAM cache. So, as persistent memory, Intel® Optane™ media fills the other gap in the hierarchy, the memory capacity gap.
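
Load-store access is what memory-mapping a file provides. The Python sketch below illustrates this, assuming an ordinary `mmap` of a file: once the region is mapped, the program updates data by assigning into memory and reads it back by slicing, rather than calling `read()` or `write()`. The helper name `store_and_persist` and the file paths are hypothetical. On a regular filesystem the OS page cache still sits in between; with persistent memory exposed through a DAX-mounted filesystem, the mapping can reach the media directly. `mmap.flush()` (which calls `msync`) is a conservative way to make the stores durable. Native persistent-memory code would more typically use PMDK's libpmem (`pmem_map_file`, `pmem_persist`), which flushes CPU caches from user space.

```python
import mmap
import os
import tempfile


def store_and_persist(path: str, offset: int, data: bytes, size: int = 4096) -> bytes:
    """Write `data` into a memory-mapped file with plain stores, flush it, and read it back."""
    if offset < 0 or offset + len(data) > size:
        raise ValueError("data does not fit in the mapped region")
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        if os.fstat(fd).st_size < size:
            os.ftruncate(fd, size)  # grow the file so the whole region can be mapped
        with mmap.mmap(fd, size) as region:
            region[offset:offset + len(data)] = data  # a store into memory, not a write() call
            region.flush()                            # msync(): ask the OS to make it durable
            return region[offset:offset + len(data)]  # a load from memory, not a read() call
    finally:
        os.close(fd)


if __name__ == "__main__":
    # On real persistent memory, `path` would sit on a DAX-mounted filesystem
    # (for example a hypothetical /mnt/pmem); a temporary file works for a demo.
    with tempfile.TemporaryDirectory() as tmp:
        print(store_and_persist(os.path.join(tmp, "region.bin"), 128, b"hello, pmem"))
```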

Because NAND doubles its capacity every two years, it can take on some of the data currently stored on HDDs, moving that data closer to the processor. Intel® 3D NAND is leading the charge on capacity per unit of silicon area.

What does all this mean? It means the datacentre, currently struggling with too much data too far from the CPU just as our laptops struggled in 2008, is catching a big break. Advances in memory technologies, and in the system technologies needed to make them useful, are delivering a game changer much like the first SSDs. Intel is working to complete the hierarchy so that datacentres feel the improvement too.

This article is adapted from an original blog post by Intel Fellow Frank Hady.