voyager624 - Fotolia

Disaster recovery planning: Where virtualisation can help

The disaster recovery planning process is not fundamentally technology-centric, so when can virtualisation make it quicker and easier to restore services after an unplanned outage?

Virtualisation has revolutionised the way we deploy applications in the datacentre, and arguably that stretches to disaster recovery.

Server provisioning that previously took weeks or months is transformed into an automated task completed in minutes. Virtualisation delivers agility, flexibility and greater resiliency through features such as snapshots, vMotion and HA/FT (high availability/fault tolerance).

Meanwhile, disaster recovery is also transformed. In physical server environments, the process of recovery from an unplanned outage required failover to a replica of the primary environment or identical hardware and operating systems to which backups could be restored.

There are claims that virtualisation does away with many of these processes and makes disaster recovery easier and simpler to deploy, but to what extent is that the case?

In this article, we will examine each phase in the disaster recover planning and provisioning process and assess to what extent virtualisation can help.

Comparing physical to virtual

Server virtualisation is a great tool to consolidate and simplify the deployment of application workloads. Where hardware was underutilised – typically with a single application per operating system instance – virtualisation has provided the isolation and management benefits of the server while concentrating the physical estate into a much more efficient footprint.

Virtual servers are a combination of virtual disk files that represent the physical disk, plus configuration information for processors, memory and other attached devices. This makes the virtual server – or virtual machine (VM) – highly portable, and allows virtualisation to provide capabilities such as high availability (bringing a VM up on another server after a hardware failure) and fault tolerance (running a ghost image of a VM that takes over services if hardware fails), without lots of additional hardware or complex configurations.

The ability to treat a VM as a set of files means that backup and recovery is also much simpler. The hardware on which the VM runs can vary (within limits), making it the job of the hypervisor to translate physical to virtual devices. This means the VM and its encapsulated workload is more portable than ever.

Disaster recovery planning and execution

Let’s look at the key elements of a typical disaster recovery plan and see where virtualisation technology can help.

The first step in disaster recovery planning is to look at the business requirements and match applications to service-level objectives. In the disaster recovery realm, the standard measurements are recovery time objective (RTO) and recovery point objective (RPO).

RTO specifies the amount of outage time that can be tolerated by the application before services must be resumed. Mission-critical applications have low, or even zero, RTOs (indicating services must continue at all times).

RPO describes the amount of data loss that can be tolerated by an application. This may be zero (ie, no data loss) or measured in minutes or hours. Some non-core apps (like those used for reporting) may be able to tolerate an RPO of up to 24 hours, especially where data can be generated from other sources.

At this point, choice of technology has no bearing. Carrying out a business impact/risk analysis is based on human assessments of the requirements of the business. But as we progress further into the disaster recovery planning process, we will find technology choices arise. And the question becomes, where can virtualisation help with DR?

Disaster recovery risk assessment

The next step in the disaster recovery planning process is to take the service requirements derived from an impact analysis and carry out a risk assessment.

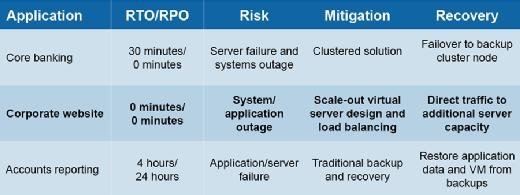

For each application or system, we can map the RTO/RPO requirements to likely risks, assess the likelihood of those risks and start to develop mitigation and recovery strategies for each one. The following table shows some examples:

At this point we can see that choices can be made between physical and virtual infrastructure.

The first example shows how a clustered solution based on physical hardware could be used to deliver on service requirements. The application can tolerate an outage of up to 30 minutes, although no data loss is acceptable.

This could be delivered from a – very costly – mirrored physical infrastructure with failover, or a virtual one, using high availability, such as VMware HA. This functionality automates the restart of an application onto secondary hardware, which can use shared storage infrastructure to ensure an RPO of zero.

The second example shows a corporate website that needs to be available 24/7 with no downtime. In this instance, the application is based on static data and therefore can be delivered from one or more web server instances that all access the same data pool. If any server is lost, load-balancing software redirects traffic to a new instance.

Virtualisation can help in this scenario by providing web server instances in separate VMs. If a hardware failure is experienced, a new web server can be deployed from a template and added to the load balancing list without the need for more complex HA or clustering software. This solution could also be delivered across multiple geographic locations.

The third example highlights how a traditional application could be protected with traditional or VM-based backup. A virtual solution may provide a faster capability for backup and restore than using a physical infrastructure.

Building a disaster recovery plan

Now we have identified our applications and quantified the associated risks, we can start to fully map out the mitigation and recovery scenarios as part of an application and infrastructure design. Virtualisation provides some unique properties that can help achieve business continuity compared with purely physical server operations. These include:

- The ability to spin up new VM instances within minutes, based on templated application workloads.

- Application recovery through fault tolerance and high availability that removes the requirement for complex recovery solutions, including metro locations.

- Integration and automation of VM failover to remote locations using tools such as VMware’s Site Recovery Manager.

- Abstraction from the hardware that allows VMs to be recovered on unlike hardware that can be of lower or higher specification or consolidated compared with the production site.

- VM/server backup based on file-image copies from the underlying storage.

- Integration of failover with the application through the use of host-based tools to avoid crash-consistent copies and higher chances of application recovery.

- Disaster avoidance through tools such as vMotion.

All these features allow the application to be deployed on infrastructure in more efficient ways than can typically be achieved with physical servers.

Testing and validation

After design comes the need to test and validate the disaster recovery plan. Whether using virtual infrastructure or not, the plan must include the ability to validate that applications can be run in disaster recovery mode and returned to normal operations within the terms of the service level objectives for each system (RPO/RTO).

Virtualisation doesn’t avoid testing (and confirming each part of the infrastructure is configured correctly), but it can make the testing process simpler to achieve. It is much easier, for example, to bring up a VM at a disaster recovery site and test functionality and data integrity while keeping the VM isolated to avoid clashing with the live production environment. This can be achieved without impacting the disaster recovery process, whereas testing with physical servers puts the production service at risk until testing is over.

Summary

Virtualisation provides many opportunities to implement disaster recovery in a more efficient and simple way. But, as we have seen, it is not a replacement for having a well thought-out, detailed and comprehensive disaster recovery plan, based on the requirements of the business. As technology continues to evolve, the disaster recovery plan will require reviews and updates to reflect current virtualisation capabilities and so becomes a “living” document to ensure ongoing business continuity.

Read more about disaster recovery and virtualisation

- We run the rule over the key functionality in Microsoft Hyper-V that can help with disaster recovery, including backup, migration, high availability and replication.

- We run the rule over the key areas of functionality in VMware that can help with disaster recovery, including VMware backup, migration, high availability and replication.