IBM open sources ‘anti-bias’ AI tool to combat racist robot fear factor

Developers using Artificial Intelligence (AI) ‘engines’ to automate any degree of decision making inside their applications will want to ensure that the brain behind that AI is free from bias and unfair influence.

Built with open source DNA, IBM’s new Watson ‘trust and transparency’ software service claims to be able to detect bias automatically.

No more ‘racist robots’ then, as one creative subheadline writer suggested?

Well, it’s still early days, but (arguably) the effort here is at least focused in the right direction.

IBM Research will release to open source an AI bias detection and mitigation toolkit

Fear factor

Beth Smith, Big Blue’s general manager for Watson AI, has pointed to the fear factor that exists across the business world when it comes to using applications that incorporate AI.

She highlights research by IBM’s Institute for Business Value, which suggests that while 82 percent of enterprises are considering AI deployments, 60 percent fear liability issues and 63 percent lack the in-house talent to confidently manage the technology.

So how does it work?

IBM says that its new cloud-based trust and transparency capabilities work with models built using a wide variety of machine learning frameworks and AI-build environments such as Watson, TensorFlow, SparkML, AWS SageMaker and AzureML.

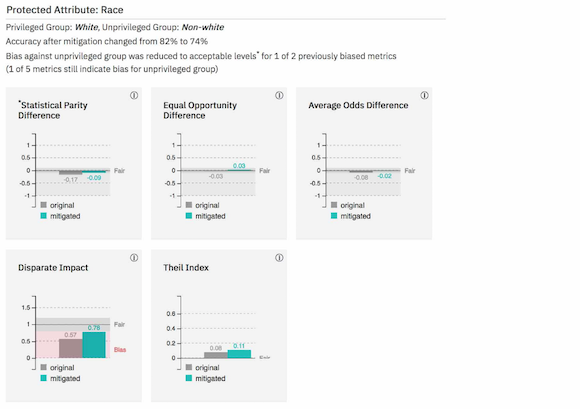

This automated software service is designed to detect bias (insofar as it can) in AI models at runtime, as decisions are being made. More interestingly, it also automatically recommends data to add to the model to help mitigate any bias it has detected… so we should (logically) get better at this as we go forward.
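IBM has not published how its runtime check works, so what follows is only a rough illustration of the general idea. A minimal sketch, assuming each logged decision records a group attribute and a 0/1 outcome (the field names `group` and `outcome` are hypothetical), is to compute the disparate impact ratio over a batch of decisions and flag it when it drops below a threshold. The 0.8 default borrows the ‘four-fifths’ rule of thumb and is an assumption here, not a Watson setting; the data-recommendation step is beyond this sketch.

```python
# Hypothetical runtime bias check, NOT IBM's implementation: the disparate
# impact ratio (favourable-outcome rate of the unprivileged group divided by
# that of the privileged group) over a batch of logged decisions.
# The field names "group" and "outcome" are illustrative assumptions.

def favourable_rate(decisions, group):
    """Share of favourable outcomes (outcome == 1) among decisions for `group`."""
    outcomes = [d["outcome"] for d in decisions if d["group"] == group]
    if not outcomes:
        raise ValueError(f"no decisions recorded for group {group!r}")
    return sum(outcomes) / len(outcomes)


def disparate_impact(decisions, unprivileged, privileged):
    """Ratio of favourable-outcome rates: unprivileged / privileged."""
    privileged_rate = favourable_rate(decisions, privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no favourable outcomes")
    return favourable_rate(decisions, unprivileged) / privileged_rate


def check_batch(decisions, unprivileged, privileged, threshold=0.8):
    """Return (ratio, flagged); flagged is True when ratio < threshold.

    The 0.8 default follows the 'four-fifths' rule of thumb (an assumption).
    """
    ratio = disparate_impact(decisions, unprivileged, privileged)
    return ratio, ratio < threshold


if __name__ == "__main__":
    # Hypothetical batch: group "A" approved 3 of 4 times, group "B" 1 of 4.
    batch = (
        [{"group": "A", "outcome": o} for o in (1, 1, 1, 0)]
        + [{"group": "B", "outcome": o} for o in (1, 0, 0, 0)]
    )
    ratio, flagged = check_batch(batch, unprivileged="B", privileged="A")
    print(f"disparate impact = {ratio:.2f}, flagged = {flagged}")
```

Here group B is approved at a third of group A’s rate, so the batch is flagged. A production monitor would run this over a sliding window of recent decisions rather than a fixed list.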

For the user, explanations are provided in easy-to-understand terms, showing which factors weighted the decision in one direction or another, the confidence in the recommendation, and the factors behind that confidence.
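To make the idea of ‘which factors weighted the decision’ concrete, here is a minimal sketch for the simplest possible case: a hypothetical linear scoring model whose weights, bias and feature names are all invented for illustration. Each factor’s contribution is its weight times its value, a logistic function turns the total score into a probability, and that probability is used as the ‘confidence’. IBM’s service handles far more complex models, and this is not its method.

```python
import math

# Hypothetical linear scoring model (NOT IBM's explanation method): each
# factor contributes weight * value to the score; positive contributions push
# towards "approve", negative ones towards "decline".

def explain_linear_decision(weights, bias, features):
    """Return (decision, confidence, factors ranked by absolute contribution)."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Logistic function maps the score to the probability of "approve".
    p_approve = 1.0 / (1.0 + math.exp(-score))
    decision = "approve" if p_approve >= 0.5 else "decline"
    # Confidence is the probability of whichever outcome was chosen.
    confidence = p_approve if decision == "approve" else 1.0 - p_approve
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return decision, confidence, ranked


if __name__ == "__main__":
    weights = {"income": 0.8, "debt_ratio": -1.5, "years_employed": 0.3}
    applicant = {"income": 1.2, "debt_ratio": 0.9, "years_employed": 2.0}
    decision, confidence, ranked = explain_linear_decision(weights, 0.5, applicant)
    print(f"{decision} (confidence {confidence:.2f})")
    for name, contribution in ranked:
        direction = "towards approve" if contribution > 0 else "towards decline"
        print(f"  {name}: {contribution:+.2f} {direction}")
```

For this applicant the debt ratio is the strongest single factor and pulls towards ‘decline’, but income and years employed outweigh it, giving an ‘approve’ with roughly 0.67 confidence.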

AI Fairness toolkit

In addition, IBM Research is making available to the open source community the AI Fairness 360 toolkit – a library of algorithms, code and tutorials for data scientists to integrate bias detection as they build and deploy machine learning models.
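Among the mitigation algorithms bundled in AI Fairness 360 is reweighing (Kamiran and Calders), a pre-processing technique that assigns each training example a weight so that group membership and outcome look statistically independent before the model is trained. The sketch below is a from-scratch illustration of that formula in plain Python, not the toolkit’s own API; the group labels and data are hypothetical.

```python
from collections import Counter

# Reweighing sketch (not the AIF360 API): each (group, label) cell gets weight
#   P(group) * P(label) / P(group, label)
# so that, in the weighted data, favourable-label rates match across groups.

def reweighing_weights(groups, labels):
    """Return one weight per example, aligned with `groups` and `labels`."""
    if len(groups) != len(labels):
        raise ValueError("groups and labels must have the same length")
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    cell_weight = {
        (g, y): group_counts[g] * label_counts[y] / (n * n_gy)
        for (g, y), n_gy in joint_counts.items()
    }
    return [cell_weight[(g, y)] for g, y in zip(groups, labels)]


def weighted_favourable_rate(groups, labels, weights, group):
    """Weighted share of label == 1 among examples belonging to `group`."""
    favourable = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    return favourable / total


if __name__ == "__main__":
    # Hypothetical data: group "a" is labelled favourable 3/4 times, "b" 1/4.
    groups = ["a"] * 4 + ["b"] * 4
    labels = [1, 1, 1, 0, 1, 0, 0, 0]
    weights = reweighing_weights(groups, labels)
    for g in ("a", "b"):
        print(g, round(weighted_favourable_rate(groups, labels, weights, g), 3))
```

Before weighting, the favourable rates are 0.75 and 0.25; after weighting, both groups sit at 0.5. The weights would then be passed to a learner that accepts per-sample weights.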

According to an IBM press statement, “While other open-source resources have focused solely on checking for bias in training data, the IBM AI Fairness 360 toolkit created by IBM Research will help check for and mitigate bias in AI models. It invites the global open source community to work together to advance the science and make it easier to address bias in AI.”

It’s good, it’s broad, but it is (very arguably) still not perfect, is it? But then (equally arguably) neither are we humans.

Image source: IBM